Why Smarter People Might Be More Prone to Irrational Biases

Woo-kyoung Ahn on the Link Between High Levels of Analytical Reasoning Skills and Biased Interpretations

Are some people less susceptible to bias? How about those who are typically considered smart? We might like to think that people who are more intelligent can discern what is right or wrong and apply only relevant knowledge to help them interpret data or judge what they see. Conversely, when we hear that some people react to certain events in a manner completely opposite to how we believe they should, it’s tempting to think of them as less smart than us.

For instance, suppose there is a person who strongly believes that COVID-19 is no more fatal than a regular flu. We might think that only foolish people could believe such a ludicrous theory and treat the deaths of millions of people around the world as “regular” deaths, believing that they were all about to die anyway. But there are plenty of people who have demonstrated intelligence in other parts of their lives who parrot this demonstrably false idea.

In fact, smarter people can be even more prone to biased interpretations, because they know more ways to explain away the facts that contradict their beliefs. A seminal study published in 1979 is probably the most frequently cited study in writings about confirmation bias, especially the kind that can lead to political polarization. But the fact that the participants needed elaborate, intelligent effort to maintain their bias has received little comment, so here are the details.

Undergraduate students were recruited to participate in the study based on their views on capital punishment. Some supported the death penalty because they believed it deters crime; others opposed it. Upon entering the lab, the participants were asked to read the findings from ten studies that examined whether the death penalty increased or decreased crime rates. Half of these (hypothetical) studies showed a deterrent effect, as in this example:

Kroner and Phillips (1977) compared murder rates for the year before and the year after adoption of capital punishment in 14 states. In 11 of the 14 states, murder rates were lower after adoption of the death penalty. This research supports the deterrent effect of the death penalty.

The other half reported that capital punishment did not deter crime:

Palmer and Crandall (1977) compared murder rates in 10 pairs of neighboring states with different capital punishment laws. In 8 of the 10 pairs, murder rates were higher in the state with capital punishment. This research opposes the deterrent effect of the death penalty.

Every time the participants read a study, they were asked to rate changes in their attitudes toward the death penalty.

By this point, readers may expect that I’m about to report the same old confirmation bias: that supporters of the death penalty said they still had positive views of capital punishment, while opponents of the death penalty still had negative views, regardless of what studies they read.

Interestingly, that’s not quite the case. After reading the results of a study showing the deterrent effect, both the supporters and the opponents became more positive about capital punishment. Similarly, both groups became more negative after reading the opposite results. That is, people were affected by new information even when it contradicted their original views. Their initial attitudes moderated how much they changed (for example, after receiving the deterrence information, proponents became even more positive about capital punishment than opponents did), but those attitudes did not stop people from making some adjustments.

Critically, the study had a second phase. Having read only brief summaries of the results, the participants were now asked to read more detailed descriptions of the studies, which spelled out their methods, such as how the states were selected (since U.S. states have different laws) or the length of time each study covered. Participants also learned exactly what the results looked like. These details made a huge difference, because they gave these smart participants excuses to dismiss the evidence whenever the results contradicted their original beliefs.

Here are some examples of what the participants said:

The study was taken only 1 year before and 1 year after capital punishment was reinstated. To be a more effective study they should have taken data from at least 10 years before and as many years as possible after.

There were too many flaws in the picking of the states and too many variables involved in the experiment as a whole to change my opinion.

Using such elaborate critiques, they convinced themselves that the studies whose results contradicted their initial beliefs and attitudes were flawed. Not only that, the contradictory results made them even more convinced of their initial position. Supporters of capital punishment became even more positive about it after reading the details of the studies that undermined its deterrent effect. Similarly, opponents of capital punishment became even more negative about it after reading the details of the studies that supported the deterrent effect. That is, evidence that contradicted their original beliefs resulted in even more polarization.

Coming up with excuses to dismiss evidence requires considerable analytic skill and background knowledge, such as how to collect and analyze data, or why the law of large numbers, covered in chapter 5, is important. When the participants could not apply such sophisticated skills because the study descriptions were so brief, biased assimilation did not occur. But once they had enough information, they could use those skills to find fault with the studies that contradicted their original position, to the point that findings at odds with their beliefs ended up strengthening them.

This study, however, did not directly investigate individual differences in the participants’ reasoning skills. Another study examined more directly whether people with different levels of quantitative reasoning skill differ in how biased their interpretations are. The researchers first measured participants’ numeracy, their ability to reason using numerical concepts. The questions varied in difficulty, but all required a fairly high level of quantitative reasoning to answer correctly: some were only slightly more complicated than, say, calculating a tip or working out the price of a pair of shoes at a 30 percent discount, and others were much harder. Questions like these:

Imagine we are throwing a five-sided die 50 times. On average, out of these 50 throws how many times would this five-sided die show an odd number? (Correct answer: 30)

In a forest, 20 percent of mushrooms are red, 50 percent brown, and 30 percent white. A red mushroom is poisonous with a probability of 20 percent. A mushroom that is not red is poisonous with a probability of 5 percent. What is the probability that a poisonous mushroom in the forest is red? (Correct answer: 50 percent)
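For readers who want to check the two sample answers, here is a small Python sketch of the arithmetic. It assumes the five-sided die is fair with faces numbered 1 through 5 (three of which are odd), and it applies Bayes’ rule to the mushroom question. The brown/white split turns out not to matter; only the 80 percent of mushrooms that are not red enters the calculation. The function names are illustrative, not taken from the study.

```python
from fractions import Fraction


def expected_odd_rolls(faces: int, throws: int) -> Fraction:
    """Expected number of odd results for a fair die with faces 1..faces."""
    odd_faces = sum(1 for f in range(1, faces + 1) if f % 2 == 1)
    return Fraction(odd_faces, faces) * throws


def prob_red_given_poisonous(p_red: Fraction,
                             p_pois_if_red: Fraction,
                             p_pois_if_not_red: Fraction) -> Fraction:
    """Bayes' rule: P(red | poisonous)."""
    joint_red = p_red * p_pois_if_red                # P(red and poisonous)
    joint_not_red = (1 - p_red) * p_pois_if_not_red  # P(not red and poisonous)
    return joint_red / (joint_red + joint_not_red)


print(expected_odd_rolls(5, 50))  # 30
print(prob_red_given_poisonous(Fraction(20, 100),
                               Fraction(20, 100),
                               Fraction(5, 100)))  # 1/2
```

The mushroom answer is 50 percent because red-and-poisonous (0.2 × 0.2 = 0.04) and not-red-and-poisonous (0.8 × 0.05 = 0.04) are equally common, even though red mushrooms are individually four times as likely to be poisonous.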

Then, participants were presented with some “data” showing a relationship between a new skin cream and a rash. The table below shows what the participants saw. In 223 out of a total of 298 cases (or about 75 percent of the time) when the skin cream was used, the rash got better, and in the remaining 75 cases, the rash got worse. Based on such data, many people would jump to the conclusion that the new skin cream makes the skin condition better.

But remember how I used monster spray and bloodletting to illustrate confirmation bias in chapter 2? Just like we must check what happens when we don’t use monster spray, we also need to look at the cases in which the new skin cream was not used. The data summarized in the table below show that in 107 out of 128 cases (or about 84 percent of the time) when the new skin cream was not used, the rash got better. In other words, according to this data, those with a rash would have been better off not using the skin cream.
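The skin cream comparison comes down to two proportions. The following Python sketch uses only the counts given in the text (the 21 “got worse” cases without the cream are simply 128 minus 107), computes both improvement rates, and checks which is larger. The `Group` class is a hypothetical helper for illustration.

```python
from dataclasses import dataclass


@dataclass
class Group:
    better: int  # cases in which the rash got better
    worse: int   # cases in which the rash got worse

    @property
    def total(self) -> int:
        return self.better + self.worse

    @property
    def improvement_rate(self) -> float:
        return self.better / self.total


used_cream = Group(better=223, worse=75)
no_cream = Group(better=107, worse=21)

print(f"used cream: {used_cream.improvement_rate:.0%} of {used_cream.total}")
print(f"no cream:   {no_cream.improvement_rate:.0%} of {no_cream.total}")

# The cream only looks helpful if its improvement rate beats the no-cream rate.
cream_helps = used_cream.improvement_rate > no_cream.improvement_rate
print("cream helps" if cream_helps else "rash improved more often without the cream")
```

Looking only at the `used_cream` row is the mistake the text warns about; the conclusion depends on comparing it with the `no_cream` row.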

Assessing these results correctly is a fairly challenging task, so it makes sense that the higher the participants’ scores were in the numeracy assessment, the more likely they were to get the right answers. And in fact that’s what happened. I should also add that there was no difference between Democrats’ and Republicans’ abilities to make the right assessment. This may seem like an odd thing to mention here, but it was critical to establish, because in another condition of the study, participants received numbers that were identical to those used in the skin cream and rash data, but these were now presented in a politically charged context.

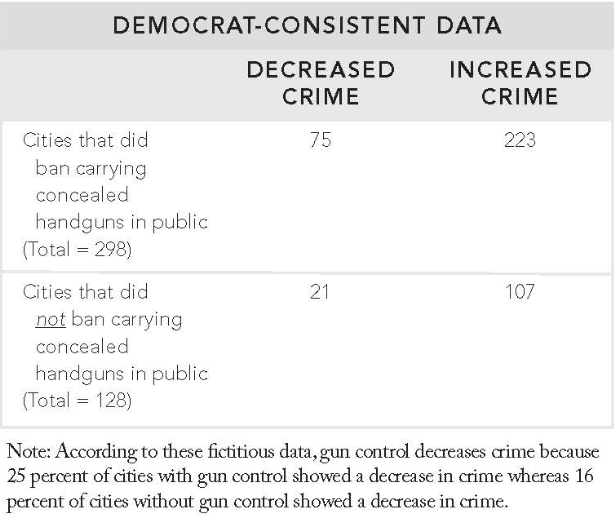

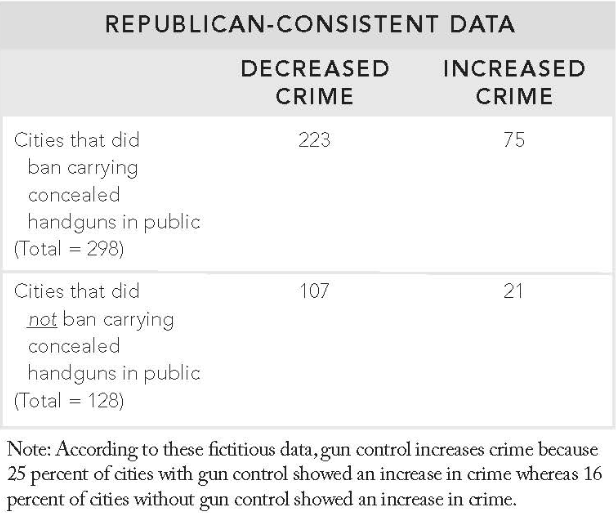

This data was about the relationship between gun control (specifically, prohibiting concealed handguns in public) and crime rates. Two versions of this data were presented: one showed that gun control increased crime, supporting the view held by a majority of Republicans, and the other showed that gun control decreased crime, supporting the view more common among Democrats.
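The passage does not spell out exactly how the two gun control versions were built. The sketch below assumes, for illustration only, that both reused the skin cream counts and simply swapped which column meant “crime decreased” and which meant “crime increased.” The names `COUNTS`, `crime_decrease_rate`, and `verdict`, and the column layout, are hypothetical.

```python
# Hypothetical layout: the skin cream counts, with "cities that banned
# concealed handguns" in place of "used the cream".
COUNTS = {"ban": (223, 75), "no_ban": (107, 21)}


def crime_decrease_rate(first: int, second: int, first_means_decrease: bool) -> float:
    """Share of cities whose crime decreased, given which column means 'decrease'."""
    decreased = first if first_means_decrease else second
    return decreased / (first + second)


def verdict(first_means_decrease: bool) -> str:
    ban = crime_decrease_rate(*COUNTS["ban"], first_means_decrease)
    no_ban = crime_decrease_rate(*COUNTS["no_ban"], first_means_decrease)
    return "gun control decreased crime" if ban > no_ban else "gun control increased crime"


# Version 1: first column = "crime decreased"; version 2 swaps the labels.
print(verdict(first_means_decrease=True))   # gun control increased crime
print(verdict(first_means_decrease=False))  # gun control decreased crime
```

Under this assumption the arithmetic is identical in both versions, so any partisan difference in accuracy reflects how people read the data, not how hard the problem was.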

Whether the participants were Democrats or Republicans, those who scored low in numeracy still had trouble getting the correct answers; they performed at about chance level in determining whether gun control increased or decreased crime. At least their interpretations of the data were not biased. Regardless of whether the data showed gun control increasing or decreasing crime, Democrats and Republicans with lower numeracy scores were more likely to be incorrect than correct, and there was no difference between Democrats and Republicans, just as with the skin cream version.

Among those with higher numeracy, however, there was a bias. Republicans with higher numeracy were more likely to get it right when the correct answer was that gun control increased crime. Democrats with higher numeracy were more likely to get it right when the correct answer was that gun control decreased crime. That is, people with stronger quantitative reasoning abilities used them only when the data supported their existing views.

I am not trying to say those lacking high levels of quantitative or analytic reasoning skills don’t make biased interpretations. Of course they do. It is highly unlikely that only “smart” people make, for example, snap race-based judgments about whether someone is holding a gun or a cell phone. The point here is that so-called smart skills do not free people from irrational biases. Sometimes they can exacerbate the biases.

__________________________________

Excerpted from THINKING 101 by Woo-kyoung Ahn. Copyright © 2022 by Woo-kyoung Ahn. Excerpted by permission of Flatiron Books, a division of Macmillan Publishers. All rights reserved. No part of this excerpt may be reproduced or reprinted without permission in writing from the publisher.