On a September night in 2017, New York Times tech columnist Farhad Manjoo and his wife were getting ready to sleep when a blood-curdling shriek arose from the bedside.

It was Alexa.

“The voice assistant began to wail, like a child screaming in a horror-movie dream,” Manjoo later recalled. His Twitter followers greeted the news with sarcasm and satirical advice:

“You have an always-on, deep-learning supercomputer node in your house always listening and you are surprised it screams?” wrote one.

“Why voluntarily have CIA spy tech in your home?” asked another.

“If I were you, I’d keep all the network access wires in one place and keep an axe nearby,” advised a third.

Suspicions about Alexa were already running high; a hacker had recently demonstrated how an Echo could be transformed into a wiretap. Over the following months, Alexa’s odd behavior continued. Users reported that she was emitting fits of unprompted laughter. Some called it “creepy” or “witch-like.” Others heard her cackling away in the dark, after they’d gone to bed. Then things got very weird at a home in Portland, Oregon, that had Amazon smart speakers in every room.

“My husband and I would joke and say, ‘I bet these devices are listening to what we’re saying,’” recalled the woman who lived there. In May 2018, she learned the speakers had done more than listen. They’d actually recorded ambient household chatter, including a conversation about hardwood floors, and emailed audio files of it to one of her husband’s employees. That was especially jarring because Amazon had claimed the Echo, while always listening, only starts recording when triggered by a spoken “wake” command, usually the word “Alexa.”

Amazon dismissed the malfunctions as rare glitches. Meanwhile, a public debate ignited on social media. What did it mean that millions of citizens had installed always-on listening devices in their own homes? Did the popularity of the Amazon Echo mark a new frontier in the domestication of surveillance—a panopticon in every parlor?

Beloved for obeying commands to play songs, check the weather, and carry out a litany of other benign tasks, smart speakers suddenly seemed to have darker, less benevolent potential. A New Yorker cartoon transformed the Echo Dot into a sinister hockey puck with a red halo, slanted eyebrows and a nefarious “hahahahahaha” hovering overhead. It had an Amazon smile logo for a mouth. Inside were rows of pointy shark teeth.

The Amazon Echo came to market in November 2014, just a year and a half after Snowden’s revelations began stoking concerns about mass surveillance. Consumers couldn’t get enough of it. By May 2017, Amazon had sold an estimated 10.7 million of the Alexa-powered devices. Overall sales of smart speakers that year—including Google Home, the lagging competitor to Amazon Echo—hit nearly 25 million. Market researchers estimated that in 2020 three-quarters of American homes would have smart speakers and that, by the following year, the number of voice-activated assistants could easily rival earth’s human population.

As Amazon saturates the market with new smart speakers, it also works to expand the capabilities of those it has already sold via software updates. The “smarter” they get, the better such devices become at extracting continuous and ever-greater profits from users worldwide. The writer Shoshana Zuboff has referred to this model as “surveillance capitalism,” and most ordinary folk have more to fear from it than they do from the NSA. A panopticon in every parlor, after all, is good for business.

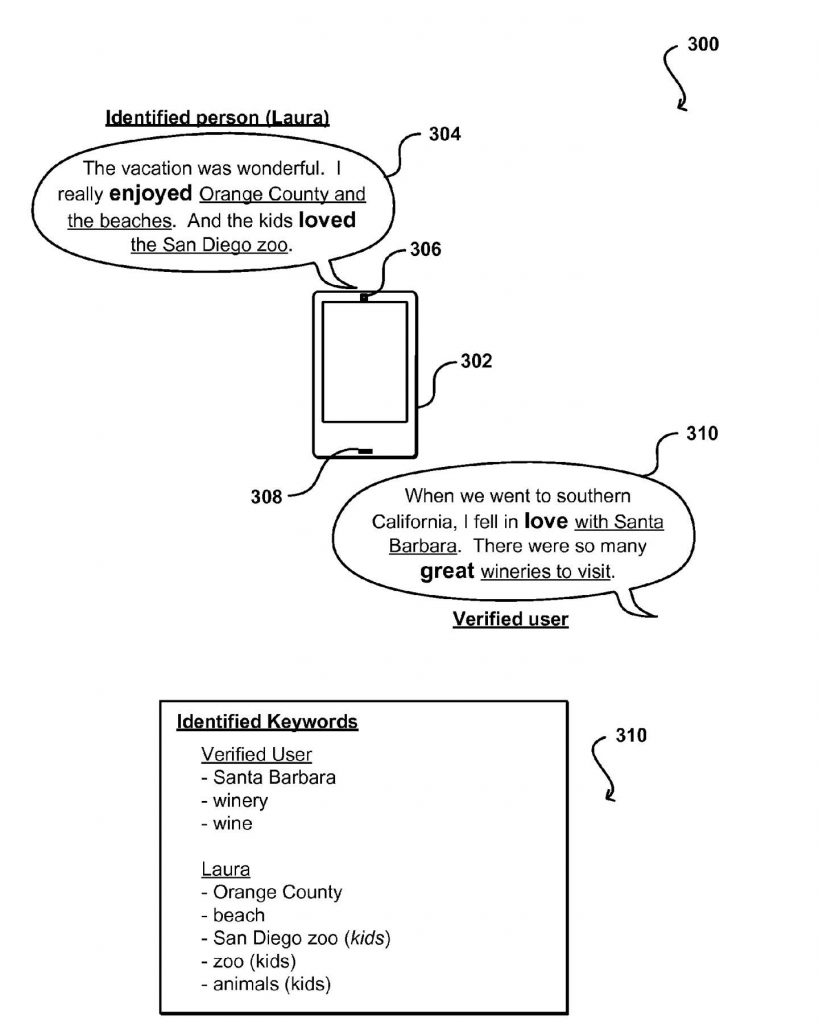

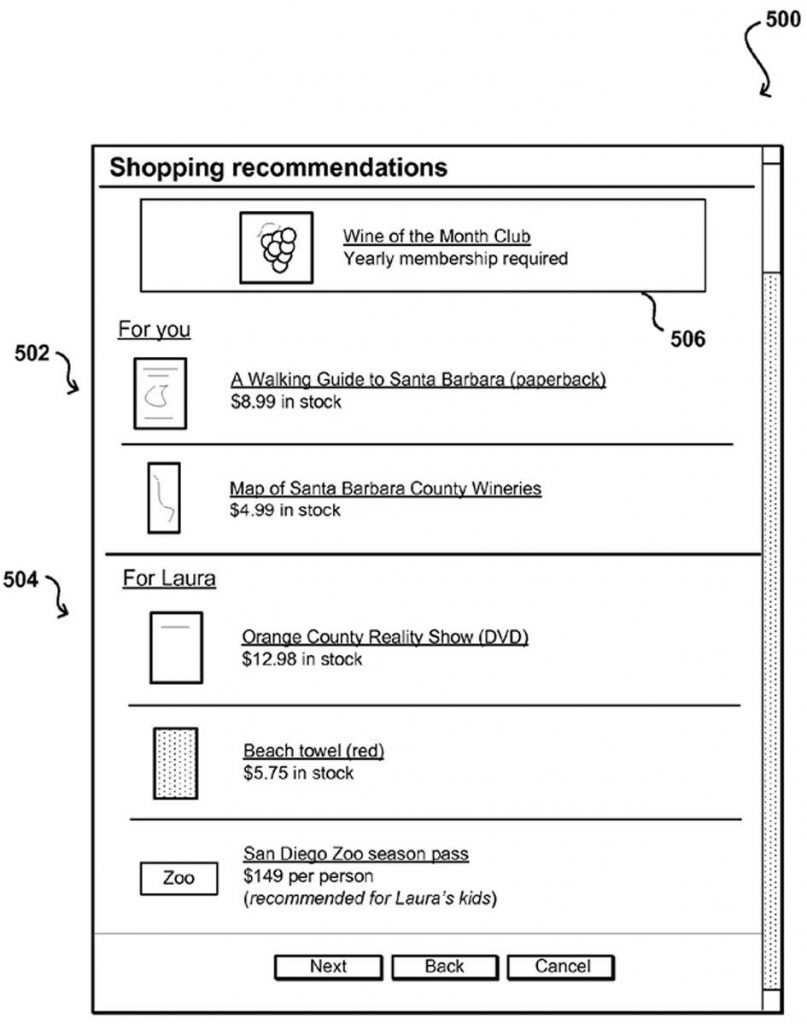

Amazon’s patents offer what could be a sneak preview of the future. They include technologies to mine ambient speech for keywords and share them with advertisers, even in the absence of a “wake” command. If a device overhears you saying, “I like the beach,” for example, you could be targeted with ads for sunblock and towels.

Amazon’s patent diagrams illustrate the process like this:

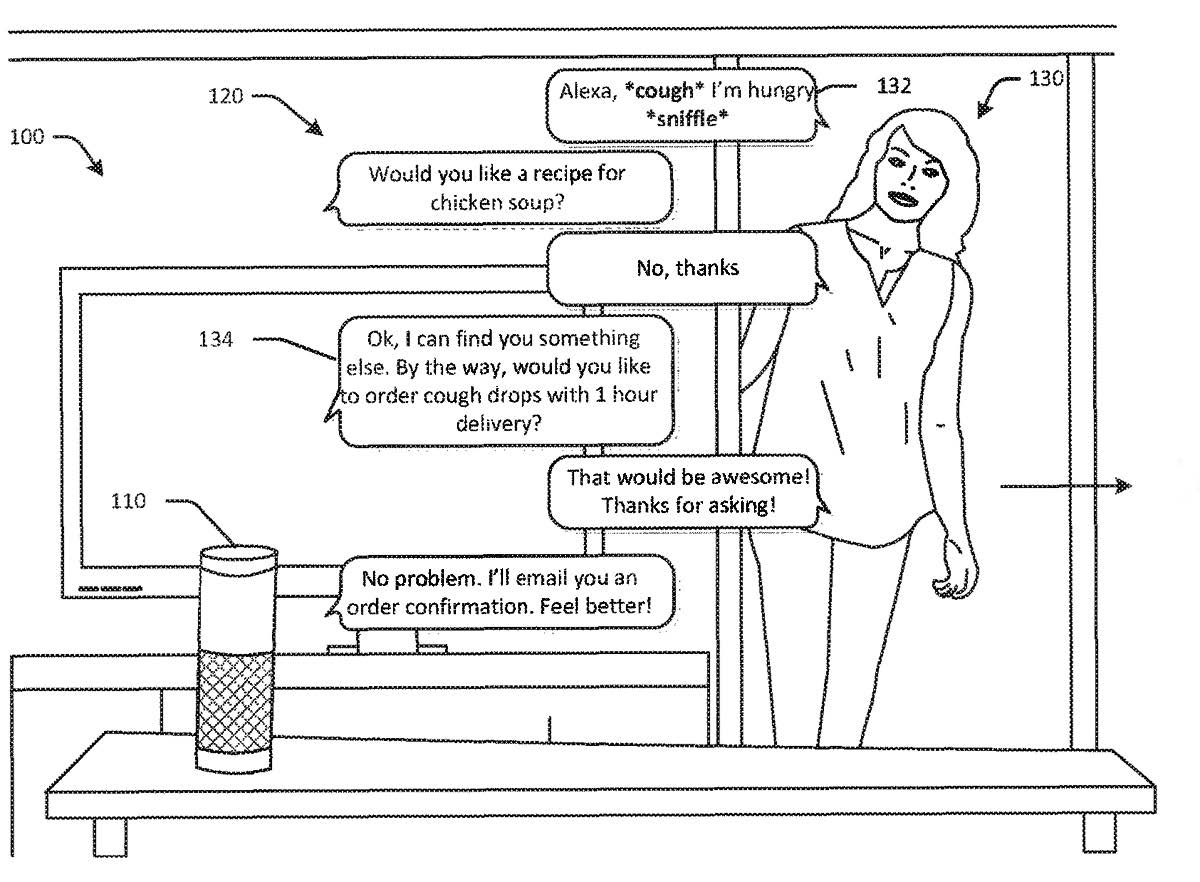

Amazon has also patented a technology that analyzes the human voice to determine, among other things, ethnic origin, gender, age, health, and mental state. “A cough or sniffle, or crying, may indicate that the user has a specific physical or emotional abnormality,” the patent explains. It includes this illustration:

Amazon and other tech giants, including Google and Facebook, say they only collect personal information for a limited range of commercial purposes. But recent history demonstrates that, once systems are already mining users’ data, sometimes a line gets crossed.

Consider Cambridge Analytica, the political data firm hired by President Trump’s 2016 election campaign. The company illicitly obtained detailed Facebook information on as many as 87 million people whose votes it hoped to sway with targeted political ads. The potential abuse of profile data had long been debated, as everyone from credit card companies to health insurers showed a keen interest in Facebook. But this time the victim wasn’t just individuals. It was democracy.

Two weeks after the New York Times and the Observer of London unearthed the Cambridge Analytica scandal, Swedish researchers exposed another case of disturbing data migration: Grindr, the gay dating app, had disclosed users’ HIV status to a pair of outside companies. While far fewer people were exposed than those caught up in the Facebook breach, the intimacy of the information—and its potential for misuse—alarmed civil libertarians.

Both of those cases involved data that users gave voluntarily to social media companies, based on trust. But that tacit agreement doesn’t always happen in an era where people shed data like skin cells. (In the case of DNA data-banking, that process can be quite literal.)

In some situations, tech companies have given away very intimate information, details users never imagined would be shared. In 2017, for example, police used an Ohio man’s pacemaker data to charge him with burning down his own house to collect on the insurance—a literal case of the tell-tale heart.

Since smart speakers’ microphones are always turned on, privacy advocates worry about them becoming wiretaps for law enforcement. That sounds alarmist until you look back at 2006, when federal agents got permission to use a cellphone as a “roving bug.” What would prevent them from making a similar request involving an Amazon Echo or any other smart device with a microphone or sensors?

The spread of networked devices—the so-called internet of things—could someday give police easy access to the most private parts of our lives. Law enforcement already has a formidable array of surveillance technologies, ranging from license plate readers to the cell site simulators nicknamed “stingrays” that mimic mobile phone towers to facial recognition and access to credit card transactions—an area of data that is mushrooming as some areas of the country move towards a cashless economy.

Meanwhile, Amazon has quietly been licensing its own facial recognition software, called Rekognition, to law enforcement agencies. In November 2018, alarmed members of Congress wrote a letter to Jeff Bezos, demanding to know more about how Rekognition was being used. Weeks later, a new Amazon patent application went public. It described a neighborhood surveillance system, made up of networked doorbell cameras that recognize “suspicious” people and call the police.

Alexa has already had a few brushes with the law. While investigating a 2015 murder in a hot tub, the police department of Bentonville, Arkansas, served Amazon with a search warrant for recordings from a nearby Echo. Reporters at The Information broke the news, calling it “what may be the first case of its kind.” The suspect agreed to release the recordings and was later exonerated. Three years later, a New Hampshire judge made a similar demand, ordering Amazon to release recordings from an Echo sitting in the kitchen where two women were murdered.

Exercise tracker data, which can include users’ heart rates, locations, and distances traveled, is showing up in courtrooms as evidence related to charges of sexual assault, personal injury, and homicide. In a Connecticut murder case, prosecutors obtained the victim’s Fitbit records to build a case against her husband, who claimed a masked intruder had shot her at a time when the device showed she was still walking around.

The internet of things is a gold mine for police. Researchers are working to expand its applications for law enforcement. At Champlain College in Vermont, graduate students dedicated a semester to “Internet of Things Forensics,” studying the Nest thermostat and other devices to see how they could help criminal investigations. A program description praised the “diversity and usefulness” of networked objects, ranging from “routers that connect a laptop to the internet” to “a crockpot (from WEMO) and slippers (from 24eight).”

__________________________________

From Snowden’s Box. Used with the permission of the publisher, Verso Books. Copyright © 2020 by Jessica Bruder and Dale Maharidge.

Jessica Bruder and Dale Maharidge

Jessica Bruder is the author of Nomadland, which was named a New York Times Notable Book and Editors’ Choice and a finalist for the J. Anthony Lukas Prize and the Helen Bernstein Book Award. It was adapted for a film starring Frances McDormand and David Strathairn, due for release from Fox Searchlight in 2020. She is also the author of Burning Book and writes for WIRED, New York Magazine, Harper’s Magazine, The Nation, The New York Times and The Guardian, among other publications.

Dale Maharidge is the author of ten books including Bringing Mulligan Home: The Long Search for a Lost Marine, which was the genesis for his recent podcast The Dead Drink First. He won the non-fiction Pulitzer Prize in 1990 for And Their Children After Them. He has been a Nieman Fellow at Harvard and held residencies at Yaddo and MacDowell. He’s currently working on his novel Burn Coast, due out in 2021. He is a professor at Columbia Journalism School and lives in New York.