Breaking Good: Why Artists Remake, Experiment, and Smash Tradition

On Remodeling Not Only the Imperfect, but the Beloved

The LEGO Movie (2014) immerses viewers in a world built entirely of colorful toy bricks: not just the buildings but also the people, sky, clouds, sea, even the wind. The hero, a figurine named Emmet, tries to stop the evil Lord Business from freezing the world with Kragle, a mysterious and powerful substance. The only way to stop Lord Business is to find the Piece of Resistance, a mythic brick that neutralizes Kragle. All around the LEGO world, fellow figurines sing the anthem “Everything is Awesome” as Emmet struggles to convince them of their impending doom.

Midway through, the film takes an unexpected turn into live action: the LEGO universe turns out to exist in the imagination of a young boy named Finn. In reality, Lord Business is Finn’s father, known as the Man Upstairs. He has built an elaborate city out of LEGO bricks in the family basement, with skyscrapers, boulevards, and an elevated train. Upset with his son for disturbing it, the Man Upstairs is planning to glue all of the pieces permanently in place with Krazy Glue. The Piece of Resistance turns out to be the cap to the Krazy Glue. The Man Upstairs’ LEGO city is the result of countless hours of effort. It’s beautiful, even perfect. But the audience naturally sides with Finn’s desire to keep building and rebuilding it, rather than the plan to freeze the world’s progress.

Thanks to the restlessness of human brains, we don’t just set out to improve imperfection—we also tamper with things that seem perfect. Humans don’t just break bad; we also break what’s good. Different creators may admire or scorn the past, but they share a characteristic: they don’t want to glue down the pieces. As novelist W. Somerset Maugham put it, “Tradition is a guide and not a jailer.” The past may be revered, but it is not untouchable. As we’ve seen, creativity does not emerge out of thin air: we depend on culture to provide a storehouse of raw materials. And in the same way that a master chef shops for the finest ingredients in preparing a new recipe, we often search for the best of what we’ve inherited in order to make something new.

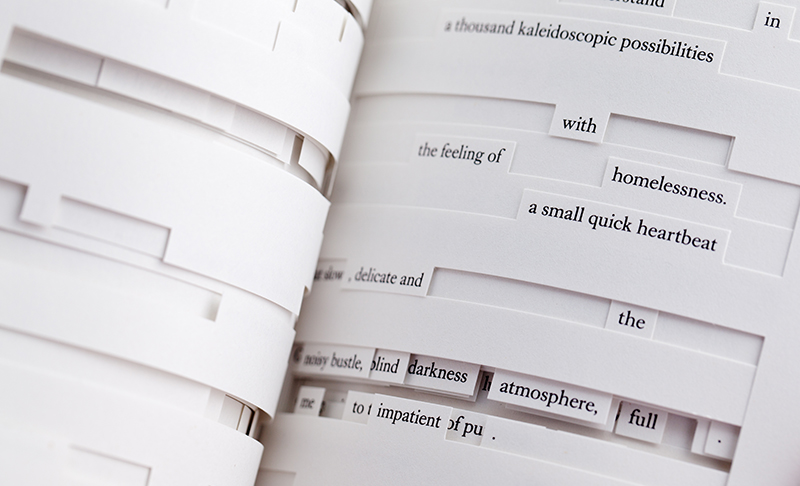

In 1941, the Nazis moved Polish Jews to the Drohobycz Ghetto as a final stop before sending them to their deaths in concentration camps. Among the condemned was an eminently talented writer named Bruno Schulz. Although Schulz was temporarily saved from deportation by a Nazi officer who admired his work, another officer gunned him down in the street. Very little of Schulz’s writing survived the war. One of his few published books was a collection of short stories, The Street of Crocodiles. Over the years the book grew in renown and, a couple of generations later, American writer Jonathan Safran Foer paid tribute to it. But rather than preserving or imitating it, he used die-cutting technology to cut away portions of Schulz’s text, turning it into something like a prose sculpture. Foer didn’t pick apart something he didn’t like; instead, he chose something he loved. He showed his admiration for Schulz’s work by remaking it into something new. Like Finn, he broke good.

A Page from Jonathan Safran Foer’s Tree of Codes.

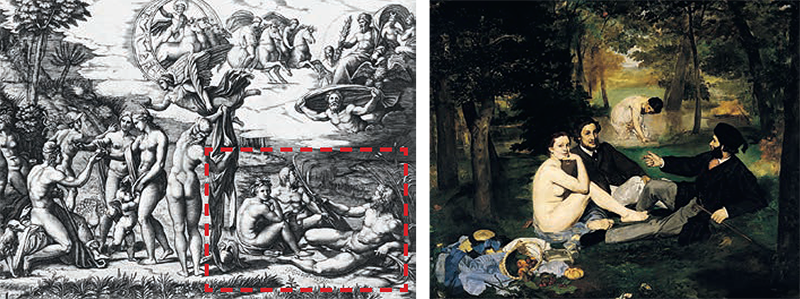

Generation after generation, we reassemble the bricks of history. Edouard Manet broke good to create his 1863 painting Le Déjeuner sur l’herbe. Using the 16th-century engraving The Judgment of Paris by Marcantonio Raimondi as a starting point, Manet transformed the three mythological figures in the lower right-hand corner into two bourgeois gentlemen and a prostitute lounging in a Parisian park.

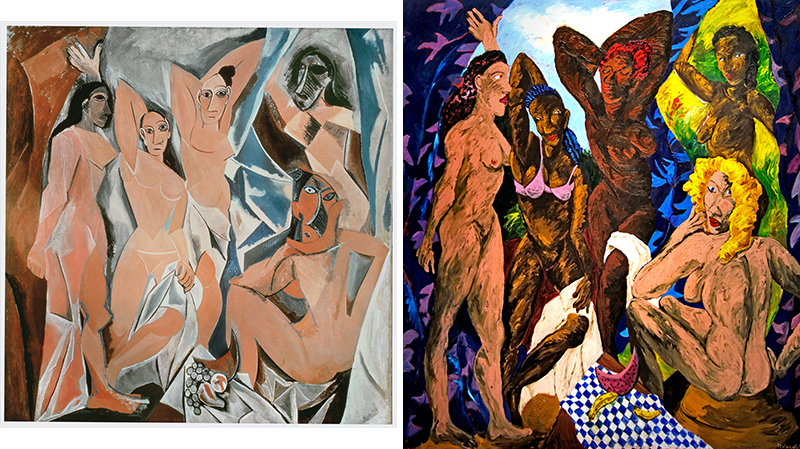

Later, Picasso broke Manet’s good when he created his version of the painting, to which he gave the same name.

And later, Robert Colescott remodeled Picasso’s iconic Les Demoiselles d’Avignon into his Les Demoiselles d’Alabama.

Occasionally, societies try to freeze conventions in place. During the 19th century, the French Art Academy set the standards for visual art. It set out to prescribe the public’s taste and what was considered appropriate to buy. The Academy’s tent was large enough to include great painters of contrasting styles, from classicists to leaders of the Romantic movement. But, like the Man Upstairs, over time the Academy began to cement things into place.

Every two years, the Academy hosted an art salon, the country’s major forum for the latest work. If you wanted to make your mark in the French art world, this was the place to do it. The salon was always highly selective, but by 1863 the jury’s taste had grown excessively narrow: they turned away thousands of canvases, including many by established painters. Manet’s Le Déjeuner sur l’herbe was in the reject pile. The jury was scandalized by its blatant sexuality and seemingly haphazard brushwork.

Previously, artists whose work was excluded could do little better than accept their fate. But this time, too many artists had broken the Academy’s “good.” There were so many rejected paintings that the artists rose up in protest. The outcry was so intense that Emperor Napoleon III visited the exhibition hall to view the rejected works. He ordered a Salon des Refusés (a salon of rejects) to be opened near the main show so the public could judge for itself. More than 400 artists signed up. The Academy put little effort into making the Salon des Refusés presentable: canvases were arranged helter-skelter and were poorly marked; no catalogue was published. Compared to the main salon, it looked like a yard sale. Despite that, the exhibition of rejected paintings was a turning point in the history of Western art. It marked a move away from mythological and historical subjects to more contemporary subject matter. Painstaking brushwork shifted to more experimental painting techniques. Thousands of people crowded into the cramped galleries, their eyes opened to works the Academy had hoped they would never see. The need to shake up tradition had triumphed over efforts to clamp it down.

Human brains continually remodel the pieces in front of them, and this urge drives science as much as art. Geologists in the early 20th century, for example, believed that the continents had never moved. As far as they were concerned, an atlas of the Earth would look the same today as at any time in its history; the Earth’s stability was not open to question. Given the data available at the time, this was a solid argument grounded in field observations.

But in 1911, Alfred Wegener read a paper that described identical plants and animals found on opposite sides of the Atlantic. Scientists at the time explained this by postulating that land bridges, now sunken, had once connected the two shores. But Wegener couldn’t stop thinking about the fact that the coastlines of Africa and South America fit together like a jigsaw puzzle. He then found unexpected correspondences between rock strata in South Africa and Brazil. Running a mental simulation, Wegener fit the seven continents together into a single landmass, which he named Pangaea. He postulated that this super-continent must have split apart hundreds of millions of years ago, its massive chunks gradually sliding away from each other. Wegener’s mental blend enabled him to “see” our planet’s history in a way others had not: he had discovered continental drift.

Wegener presented his hypothesis in a 1912 paper, and his book The Origin of Continents and Oceans came out three years later. Just as Darwin had proposed that species evolve, Wegener asserted that our planet changes over time. Wegener’s theory snapped the continents from their moorings and allowed them to float like lily pads. The fact that his model ran counter to the prevailing wisdom didn’t worry Wegener. He wrote to his father-in-law, “Why should we hesitate to toss the old views overboard? . . . I don’t think the old ideas will survive another ten years.”

Unfortunately, Wegener’s optimism was misplaced. His work was widely greeted with disdain and ridicule: to his scientific peers, it was “heretical” and “absurd.” The paleontologist Hermann von Ihering quipped that Wegener’s hypothesis would “pop like a soap-bubble.” The geologist Max Semper wrote that proof of “the reality of continental drift is undertaken with inadequate means and fails totally.” Semper went on to suggest that it would be advisable for Wegener “not to honor geology with his presence any longer, but to look for other fields that have so far neglected to write above the door ‘Holy Saint Florian, spare this house.’” Wegener faced several daunting problems. Most Earth scientists were fieldworkers, not theoreticians. For them, everything was about the data they measured and held in their hands. Wegener was short on physical evidence. He could only point to circumstantial indications that the continents were once joined; it was impossible to turn back the clock hundreds of millions of years to offer direct proof. Even worse, he could only speculate about how the Earth’s plates moved. What was the geological engine that powered these seismic shifts? To his peers, Wegener had put the cart before the horse, proffering a theory with insufficient facts: his hypothesis was too much a work of imagination.

In an attempt to persuade his contemporaries, Wegener undertook several dangerous northern expeditions to measure the motion of the continents. He didn’t make it back from his final trek. Lost in freezing temperatures en route to a base station, Wegener died of a heart attack in November 1930. The location was so remote that his body was not recovered until several months later.

Within several years, a combination of new measuring devices gave rise to a flood of data about the ocean floor, magnetic fields and dating techniques. The results forced geologists to reconsider Wegener’s discarded theory. With some hesitation, geologist Charles Longwell wrote, “The Wegener hypothesis has been so stimulating and has such fundamental implications in geology as to merit respectful and sympathetic interest from every geologist. Some striking arguments in his favor have been advanced, and it would be foolhardy indeed to reject any concept that offers a possible key to the solution of profound problems in the Earth’s history.” A few decades later, the geologist John Tuzo Wilson—who had initially scorned Wegener’s theory—changed his mind. “The vision is not what any of us had expected from our limited peeps . . . The earth, instead of appearing as an inert statue, is a living, mobile thing . . . It is a major scientific revolution in our own time.”

Continental drift was embraced by the same crowd that had derided it earlier. Wegener’s urge to challenge the status quo—to unglue the continental pieces—had been vindicated.

Creative people often break their culture’s tradition, and they even buck their own. In the 1950s, the painter Philip Guston was a young star of the New York school of Abstract Expressionists, producing cloud-like fields of color.

Philip Guston’s To B.W.T. (1950) and Painting (1954)

After several major retrospectives of his work in the early 1960s, Guston took a hiatus from painting, left the New York City art scene behind and moved to a secluded home in Woodstock, New York. He re-emerged several years later. In 1970, an exhibit of his newest work opened at the Marlborough Gallery in New York City. It caught his admirers by surprise: Guston had turned to figurative art. His trademark color palette of red, pink, grey, and black was still there, but now he was painting grotesque, often misshapen images of Ku Klux Klan members, cigarettes, and shoes.

Philip Guston’s Riding Around (1969) and Flatlands (1970)

The reaction was almost unanimously hostile. In a New York Times review, art critic Hilton Kramer called the work “clumsy” and said Guston was acting like a “great big lovable dope.” Time magazine critic Robert Hughes was equally dismissive. About the Ku Klux Klan motif, Hughes wrote, “As political statement, [Guston’s canvases] are all as simple-minded as the bigotry they denounce.” Amid all the negative publicity, the Marlborough Gallery did not renew the artist’s contract. By breaking his own good, Guston had disappointed many of his most ardent admirers. But he stood by his decision and painted representational art until his death in 1980.

Hilton Kramer never wavered in his opinion. But others did. In 1981, Hughes published a reassessment:

The paintings Guston began to make in the late 60s, and first showed in 1970, looked so unlike his established work that they seemed a willful and even crass about-face . . . If anyone had suggested in those days that the figurative Gustons would exert a pervasive influence on American art ten years later, the idea would have seemed incredible.

Yet it may have turned out that way. In the intervening decade, there has been a riotous growth of deliberately clumsy, punkish figurative painting in America: paintings that ignore decorum or precision in the interest of a cunningly rude, expressionist-based diction. Quite clearly, Guston is godfather to this manner, and for this reason his work excited more interest among painters under 35 than any of his contemporaries.

By the end of the 1960s, the Beatles had achieved, even more than Philip Guston, a huge level of expertise and fame, in their case in the world of music. But even as they produced hit after hit, the band continued to experiment. Their creative efforts reached a peak in the White Album, released in 1968. It grew out of the band’s stay at an Indian ashram and John Lennon’s love affair with the avant-garde artist Yoko Ono. The penultimate track, Revolution 9, is made up of a collage of repeating loops, each revolving at its own speed, including snippets of classical music being played backward, clips of Arabic music, and producer George Martin saying “Geoff, put the red light on.” Where did the title come from?

Lennon recorded a sound engineer saying “This is EMI test number nine” and then spliced out the words “number nine,” replaying them over and over. As he later told Rolling Stone magazine, it was “my birthday and my lucky number.” As the longest track on the album, it sent the message that the band that had broken the traditions of 1950s pop music had also seen fit to break their own traditions. As one music critic put it, “For eight minutes of an album officially titled The Beatles, there were no Beatles.”

The creative destruction of one’s own structures happens not only in art, but in science as well. As one of the world’s preeminent evolutionary biologists, E.O. Wilson had spent decades investigating a puzzle of nature: altruism. If an animal’s defining goal is to pass its genes on to the next generation, what reason could it have to risk its life for another? Darwin’s solution was kin selection: animals behave selflessly to protect their biological relatives. With Wilson taking the lead, evolutionary scientists coalesced around the view that the greater the number of genes in common, the greater the likelihood of kin selection.

But Wilson was not ready to glue down the pieces. After 50 years of championing kin selection, he reversed his position. He began to argue that new data contradicted the established model. Some insect colonies made of close relatives showed no altruism, and other colonies with more diverse gene pools behaved far more selflessly. Wilson developed a new view: there are environments that require teamwork for survival, and in those cases a penchant toward cooperation becomes genetically favored. In other situations, when teamwork doesn’t yield an advantage, animals will look out for themselves, even at the expense of their relatives.

Reaction to Wilson’s paper was fierce. Many leading biologists argued that he had lost his way and that his paper should not even have been published. In a book review titled “The Descent of Edward Wilson,” Richard Dawkins, one of Wilson’s most prestigious peers, was unsparing in his criticism: “I’m reminded of the old Punch cartoon where a mother beams down on a military parade and proudly exclaims, ‘There’s my boy, he’s the only one in step.’ Is Wilson the only evolutionary biologist in step?”

But being out of step with his colleagues didn’t bother Wilson. Others were amazed that such a venerated figure, the winner of two Pulitzer Prizes, would put his standing in the field in jeopardy. But Wilson, a practiced innovator, was not afraid to radically change his views to match where the science led him—even if it meant overturning his own legacy. The jury is still out on the veracity of Wilson’s proposal (it may turn out to be incorrect), but, right or wrong, there are no pieces that he considers glued into place.

*

Humankind constantly renews itself by breaking good: rotary phones turn into push-button phones, which turn into brick-like cellphones, then flip-phones, then smartphones. Televisions get larger and thinner, and wireless and curved and in 3D. Even as innovations enter the cultural bloodstream, our thirst for the new is never quenched. But perhaps there are some achievements that reach such a state of perfection that later minds agree to keep their hands off? For such a creation, one might look no further than a Stradivarius violin. After all, the goal of a violinmaker is to create an instrument with the ability to project a beautiful, rich tone all the way to the back of a concert hall, while also being comfortable to play. In the hands of the Italian maker Antonio Stradivari (1644–1737), the proportions, choice of woods and even proprietary varnish reached their peak. More than three hundred years later, his instruments remain the most coveted on the market. Stradivarius violins can fetch more than $15 million at auction. So it seems unlikely that anyone would try to improve on a Strad, the zenith instrument of its kind.

But the innovative human brain simply doesn’t understand the concept of leaving well enough alone. Drawing on contemporary research into acoustics, ergonomics and synthetic materials, modern violinmakers have explored making violins lighter, louder, easier to hold and more durable. Consider Luis Leguia and Steve Clark’s violin, built from composite carbon-fiber materials. In addition to being lightweight, it’s not affected by changes in humidity—an unhappy trait of wood instruments, which develop cracks.

The “Lady Blunt” Stradivarius and Leguia and Clark’s carbon-fiber violin

During an international violin competition in 2012, professional violinists were asked to play and rate several instruments, old and new. The twist was that the musicians wore goggles so they could not see which instrument they were playing, and perfumes were used to mask the distinctive smells of the old violins.

Only one-third of the participants ranked the old instruments as winners. Two Strads were used, and the more famous one was the least often chosen. The test called into question the notion that a Strad represents a standard that can never be surpassed.

It may not be easy to unseat a Stradivarius as the ultimate object of desire, but incremental advances are leading to a modern violin that is more powerful, less vulnerable to wear-and-tear, and less expensive than its illustrious predecessor. When a soloist takes the stage with a synthetic instrument and sings out the soaring melodies of the Beethoven Violin Concerto, it does not seem so far-fetched to break something as “perfect” as a Strad.

*

No one wants to live the same day over and over again. Even if it were the happiest day of your life, events would lose their impact.

Happiness would wear off because of repetition suppression. As a result, we continually alter what is already working. Without that urge, our most delicious experiences would be rendered flavorless by routine.

It’s easy to be intimidated by the giants of the past, but they are the springboards of the present. The brain remodels not only the imperfect, but the beloved. Just as Finn undoes the handiwork of the Man Upstairs, we too are obligated to put the state-of-the-art back onto the workshop table.

__________________________________

From The Runaway Species. Used with permission of Catapult. Copyright 2017 by Anthony Brandt and David Eagleman.

Anthony Brandt and David Eagleman

Anthony Brandt is a composer and professor at Rice University’s Shepherd School of Music. He is also Artistic Director of the contemporary music ensemble Musiqa, winner of two Adventurous Programming Awards from Chamber Music America and ASCAP.

David Eagleman is a neuroscientist and the New York Times bestselling author of Incognito: The Secret Lives of the Brain and Sum. He is the writer and host of the Emmy-nominated PBS television series The Brain, an adjunct professor at Stanford University, a Guggenheim fellow, and the director of the Center for Science and Law.