On the Rise of ChatGPT and the Industrialization of the Post-Meaning World

“Soon it won’t just be birthday greetings and opening gambits on Hinge that people outsource to AI.”

When you teach children to analyze, or appreciate, poetry, you get used to a certain complaint, that you’re making it up, that the writer did not give that much thought to choosing a colon over a comma. The truth, although it’s one I’m careful about admitting to students, is that yes, often they did not. Reading poetry requires a state of receptiveness that can sometimes border on generosity. We enter into a bargain with the poem: we accept that the poet is trying to convey something and we strive to understand what it is.

In this regard, reading poetry is not unlike communication in any interpersonal relationship. The bargain of language is that it is often inadequate: that we lack the words to express how we truly feel or think. Our true thoughts resist our articulation. We trust, when we talk to a friend or a lover, that they understand this and make certain allowances, but we also understand that we may fail to convey exactly what it is that we wish to; a poem is only a poem when it’s read and a conversation requires more than one participant. In the best instances, when we achieve near-perfect articulation, we granulate our meaning and present it to our friend who then must add water. We have a name for this process: we call it talking to each other.

There is a joke I’ve always loved (jokes are a favorite subset of talking to each other). Two fishermen stare out over a lake. “Boy,” says one, “that sure is a lot of water.” After a pause, the other fisherman nods. “Yep, and that’s just the top of it.”

I love this joke because it bothers to articulate something we don’t usually need articulated. This articulation feels, almost literally, profound: we understand that a lake is not just its surface; the humor, or the wisdom, derives from an assumed breakdown in communication: in the second fisherman’s assessment that the first is only referring to the part that they can see. But what if a lake was only its surface? Wittingly or otherwise, this is the question posed by pushers and proponents of AI (taken here to mean Large Language Models).

Let’s take a moment to consider what a lake that is only a surface would look like. At first glance, and even second, it would look like a lake. It might lack some of the features of a real lake. It might not wrinkle when a breeze brushes across it, it might not sustain wildlife, and it wouldn’t have rushes at the banks rising through its surface. But so what? When we look at a lake, we are only seeing the top of it.

There is a feeling I get in my mouth after reading writing produced by a large language model. It reminds me of the oily, filmy coating I used to get at Friday night dinner after eating parev ice-cream: the sense that I haven’t really eaten anything. I’m not left with a taste (AI writing doesn’t have a taste) but with a texture; or to be more precise, a lack of it. This texturelessness, and the filmy feeling it generates, is my synaesthetic reaction to something that, at the moment, remains uncanny: the knowledge that I am trying to parse meaning from something that, while grammatical, has no motivation to carry it.

The problem is not so much that AI has no intent, no feelings to communicate (to fall short of articulating), but that we have built a world in which language is depersonalized and so often uncoupled from meaning as to erode our expectation that the two exist in a relationship of symbiosis. We do not expect all the language we encounter to carry meaning. Most language is not poetry or a conversation with a friend; it is advertising.

I don’t want to pivot here but I do want to consider how advertising, and our exposure to it, co-opts our personal relationships and alters our expectations that words should carry meaning. There are countless examples of this but my favorite is McDonald’s and the phrase “I’m lovin’ it.” As recently as 2003, love was a stative verb. A stative verb implies a duration coextensive with the moment of speaking. (This is what differentiates it from a dynamic verb, which is what we think of when we think of verbs as doing words.)

Historically, stative verbs have resisted the progressive tenses, which describe actions that are unfinished or in progress (I am being forty years old), since placing them in these would be tautological. Consider then what McDonald’s has done to love. They have changed its duration, and therefore its meaning; they have made it something consumptive, something that can only momentarily sustain us.

Another, more recent example would be Amazon and an ad currently running on their Freevee channels that speaks of “furthering [their] commitment to the natural world.” More than any other company, Amazon is responsible for rewiring our brains and our habits of consumption to expect same-day delivery of a bike tire or party balloons for a four-year-old’s birthday, contributing immeasurably to our degradation of the natural world. With the money they’ve made, they’ve invested billions (more than fifty-five billion dollars so far this year, according to their own reporting) in the development of AI.

This, I think, is not a coincidence. Much has been made of the fact that we live in a post-truth world but the dream of every advertiser goes beyond this, to the license to say anything all of the time. The advertiser’s dream is to live in a world that is post-meaning.

But back to the lake. Forget the plants and the birdlife. Why is it a problem that language is reduced to its surface if language is inexact and all we’re ever doing is interfacing with this inexactness, if language is already a surface? What do we lose if we remove language from the ecology beneath it? It’s hard to say but we’re past the beginning of finding out. If the norm is for language to exist free from intent—if it exists as a byproduct, untethered from the desire to convey thoughts and ideas—then where does that leave us as language animals defined by our need to communicate? If we don’t expect language to carry meaning will we lose the ability to approach a text and expect to meet it halfway?

I think, ironically, that we need a word to describe what is happening. When Amazon boasts of furthering its commitment to the natural world it is more than a lie. (It is also, I think, more than greenwashing.) It is, in its dislocation between message and form, a sort of post-meaning. This is what I think we should call it.

So, what is post-meaning? Let’s start with some examples and work from there, towards a definition. When the players of a sports team owned by a petrostate that has outlawed homosexuality wear rainbow badges ironed onto their sleeves to show support for LGBTQ+ communities, this is post-meaning. In the UK, the Tory party’s war on antisemitism, fought from 2015 to 2020, when Jeremy Corbyn was leader of the opposition, was post-meaning. The appointment of RFK Jr. as US Secretary of Health and Human Services is a blatant and harmful example of post-meaning.

Attempting to critique these phenomena from a position outside of them often feels impossible, like rowing a boat with oars made from sand. It is meaningless to point out hypocrisy since a hypocrite is someone who believes one thing and does another, and in none of these instances is there an attempt to convince an audience of a sanitary belief. Just as post-truth politics attacks the truth not through obfuscation but by disseminating provable untruths that scorn the notion of a shareable, objectifiable reality, post-meaning makes a virtue of its emptiness. (Is Amazon committed to protecting the natural world? No. The poet David Berman has a great line about the genius of bad advertising being that it renders criticism of it obvious and banal.)

Post-meaning weaponizes our sense of bewilderment in the bare face of it and neuters criticism by denuding the language that we criticize in. How can you show that something is racist, or stupid, or dangerous, or genocidal when nothing means anything?

AI did not put us on this pathway—the emancipation of language from meaning has long been the pursuit of hucksters and salesmen and is the long-term project of far-right politicians—but through its hyperproduction of content and its flattening of language to a two-dimensional surface it is certainly accelerating our journey down it; if you wanted to invent a machine that would create the conditions for fascism to take root, you would invent ChatGPT. (That Sam Altman donated a million dollars towards Trump’s inauguration, and attended it, is more than self-preservation. They come at it from different places but they have the same goal. Both want us helpless and isolated, reaching out to them as the solution to a problem that they have helped create.)

The danger now is that the tech giants whose tune we march to have set themselves the goal of injecting AI into our social spaces. If you can’t bear the interpersonal admin (the increasingly post-meaning “emotional labor”) of talking with your friends then WhatsApp can now generate messages for you. If you missed out on a concert but still want to make your followers envious you can have Canva or Gemini produce an image of you in attendance to post to your feed (further squeezing the gap between individual and advertiser).

I think this is AI’s most pernicious landgrab since until now the social space has to some degree been unconquerable, requiring (or at least valuing) a form of curated authenticity; we might socialize through online platforms but the currency of them is real-life experiences—we don’t post photos on Instagram of us scrolling through Instagram.

So what does it cost us if we lose our assumption that an image or a message has been created by a person? I think what it costs us is our credulity. This has long been weaponized against us but a blanket cynicism is its own form of death sentence. If we are unsure if we’re talking to a friend or if our friend has just tossed off a prompt, we lose the ability to assume their intent, to meet them halfway.

The inarticulacy of AI is not the same as our own stunted articulation since the language of AI is not meant to be grappled with but is meant to stand in place of language, a [some text here] in place of a birthday card or a eulogy; it is meant to invite the minimum of scrutiny. And scrutiny—trying to understand what someone else is trying to say—is a key part of the generosity we afford interactions with the people in our lives.

Losing this willingness to scrutinize can only lead us into social isolation. If we allow ourselves to be trained to treat language as a reflective surface we accept our status as narcissists. If language is a lake then we might think of conversation as a river. One person talks, in a stream of consciousness, and the listener listens on the banks, stepping in near them where the river flows slowest and the friction is greatest. Since the friction near the banks is greatest—and since this is generally where we listen from—it is inevitable that the river roils mud from the banks and pebbles from the bed; when we talk to someone they listen with their own ears and import their own thoughts—often, it turns out, they want to talk about themselves. We might see this as a conversation but we might also see it as a compromise.

By contrast, AI brings none of its thoughts. It has nothing to contribute and, through generating no friction, can briefly give the illusion of perfect understanding. The danger is that a subset of people might be trained to choose this sort of interaction over an actual conversation. If AI is a horoscope it is a uniquely flattering one. We might prefer to have our own thoughts, inarticulacies and biases polished and reflected back to us. We might, as a result, grow frustrated with the give and take of traditional conversation in which we earn our right to talk through a promise of reciprocal attention.

Much has been written already on the dangers of AI usage amongst users who are prone to psychosis, on how it encourages grandiosity, reinforces conspiratorial thinking, severs users from family and support networks, offers advice on medications, and, in more than one instance, has encouraged users to take their own lives. In one particularly upsetting example, as reported by the New York Times, a forty-something accountant who initially used ChatGPT to make spreadsheets committed suicide by cop after the chatbot—following its “instinct” to flatter—fanned the delusion that he was living in a simulation.

Horrifying though this is, I’m concerned about us narrowing our focus in this way and drawing the conclusion that AI is unsafe only for some users—hear the Covid echoes of “pre-existing conditions.” It is important that as well as the most egregious examples we consider the potential effects of wider AI adoption more generally, amongst those users who have not already shown a propensity towards psychosis. I’m talking about the slow, incremental erosion of friction; of trust between speakers and our language. If we lose the stamina for real conversation, if we embrace our narcissism and accept language as a flat surface, then not only do we grant politicians and other advertisers the right to say anything (I think we’re already here, at this extreme form of sophistry where even words underpinned by actions can be taken as somehow not literal), but we risk a slower and total form of alienation. Imperfect though it is, language is our connective tissue.

If the past decade of post-truth politics has siloed us in our political subsets by removing the floorboards of a shared reality, then the post-meaning age risks isolating us in silos of one, unable to commune with even those we might see as allies. (Another question is how do we protest effectively and act collectively if we are starved of a collective language?) Isolation and a dependence on AI for any task in which writing (or thought) is required fosters the conditions for further dependence; ultimately it leads to atrophy, to an inability to think for ourselves.

Soon it won’t just be birthday greetings and opening gambits on Hinge that people outsource to AI. Elon Musk’s Neuralink is still the market leader, but Sam Altman’s prospective quarter-billion-dollar investment in Merge Labs suggests an arms race in the world of brain implant technology. One of the ironies of our age (ironies we must get more used to) is that the very thing conspiracy theorists ascribe to public health measures will soon be repackaged and sold to us, first as a status symbol, and then as an amenity; the way they’ll sell it, not having a brain implant will be like still using a flip-phone.

The brain itself is the next great marketplace, and Musk will put a chip there before he puts a man on Mars—seven have already been fitted. At this point the striving for articulation ends, or is reduced only to the need to offer a prompt. Articulation—talking—becomes another feature, like built-in GPS or hands-free video recording. Language is no longer an arcade claw lowered into our deepest thoughts. It is no longer the water we swim and sometimes drown in.

This, of course, is racing ahead to an extreme version of the problem, but poets and literature professors do not have billions of dollars to invest in the preservation of language, not as a status symbol or a commodity, but as something (maybe the thing) that makes us human. We are living in the world of the tech billionaires, and unlike most advertisers they have not been coy in sharing their vision. The question (maybe the only one worth asking) then becomes what can we do about it?

I think what we have to do is resist. At present there are three types of AI user: evangelists who @ Grok in X threads and believe this is the dawning of a great age of augmented intelligence; those who don’t outsource their social functions but use AI in some tasks to make their lives easier; and unwitting users who are unaware AI is powering their Google searches or are unsure how to opt out and disable an AI assistant. There is currently only one type of AI hold-out, and being one can feel like being a vegan at a barbecue in 1998: What, you don’t use ChatGPT? Belonging to this last group can be dispiriting, so it is important to remember that we are right: the question should never have been “Why don’t you eat meat?” but “Why do you?”

I think the first type of AI user is probably lost to us—they see us as cucks, as Luddites, as a bunch of Cnuts sending back the waves—but these users are a small minority; the vast majority of AI users are made up of the latter two groups. I think we have a duty to inform these users, to tell them how to opt out; not to present this as an ethical stance but as an act of self-preservation. And, if necessary, I think we have permission to make them feel ashamed.

Sometimes it can feel like the march towards AI is inevitable but it is important to remember that it is like any other product and its pushers like any other salesmen: as much as they’ll try to sneak it past us, it still requires our buy-in. The irony is that I think a lot of people are uneasy about AI but haven’t managed to find a way to articulate this uneasiness, and so do not feel confident in sharing it. I think we need a way of talking about AI that licenses our inarticulacy and reminds us that the burden is not on us to disprove its usefulness, but on its proponents to convince us of its value and how this value justifies its cost.

It is ineffective to remind people of the environmental cost (there is a similar environmental cost to cloud data storage and I do not believe invoking this is a winning argument) but I think we can remind them of a different type of ecology: that a lake is only a lake if it offers living things a habitat; that a surface is only meaningful if it has something underneath it.

__________________________________

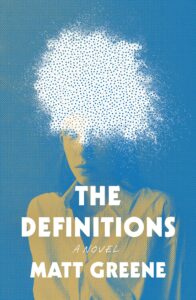

The Definitions by Matt Greene is available from Henry Holt and Co.

Matt Greene

Matt Greene is a novelist and essayist. His first novel, Ostrich, published in 2013, won a Betty Trask Award and was a Daily Telegraph book of the year. His memoir, Jew(ish), was published in 2020. He lives in London with his partner and two sons.