The term black hole is of very recent origin. It was coined in 1969 by the American scientist John Wheeler as a graphic description of an idea that goes back at least two hundred years, to a time when there were two theories about light: one, which Newton favored, was that it was composed of particles; the other was that it was made of waves. We now know that really both theories are correct. By the wave/particle duality of quantum mechanics, light can be regarded as both a wave and a particle. Under the theory that light is made up of waves, it was not clear how it would respond to gravity. But if light is composed of particles, one might expect them to be affected by gravity in the same way that cannonballs, rockets, and planets are. At first people thought that particles of light traveled infinitely fast, so gravity would not have been able to slow them down, but the discovery by Roemer that light travels at a finite speed meant that gravity might have an important effect.

On this assumption, a Cambridge don, John Michell, wrote a paper in 1783 in the Philosophical Transactions of the Royal Society of London in which he pointed out that a star that was sufficiently massive and compact would have such a strong gravitational field that light could not escape: any light emitted from the surface of the star would be dragged back by the star’s gravitational attraction before it could get very far. Michell suggested that there might be a large number of stars like this. Although we would not be able to see them because the light from them would not reach us, we would still feel their gravitational attraction. Such objects are what we now call black holes, because that is what they are: black voids in space. A similar suggestion was made a few years later by the French scientist the Marquis de Laplace, apparently independently of Michell. Interestingly enough, Laplace included it in only the first and second editions of his book The System of the World, and left it out of later editions; perhaps he decided that it was a crazy idea. (Also, the particle theory of light went out of favor during the nineteenth century; it seemed that everything could be explained by the wave theory, and according to the wave theory, it was not clear that light would be affected by gravity at all.)
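Michell's argument can be put in rough numbers. The sketch below is not taken from his paper: it assumes the textbook Newtonian escape-velocity formula, rounded SI constants, and illustrative function names, and simply asks how small a body of a given mass would have to be before the escape velocity at its surface reached the speed of light.

```python
# Newtonian "dark star" estimate in the spirit of Michell's argument.
# Assumptions: textbook escape-velocity formula, rounded SI constants,
# and hypothetical function names chosen for this illustration.
import math

G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8          # speed of light, m/s
M_SUN = 1.989e30     # mass of the sun, kg
R_SUN = 6.957e8      # radius of the sun, m


def escape_velocity(mass_kg, radius_m):
    """Newtonian escape velocity from the surface of a spherical body."""
    return math.sqrt(2.0 * G * mass_kg / radius_m)


def critical_radius(mass_kg):
    """Radius at which the Newtonian escape velocity equals the speed of light."""
    return 2.0 * G * mass_kg / C**2


if __name__ == "__main__":
    v_sun = escape_velocity(M_SUN, R_SUN)
    r_crit = critical_radius(M_SUN)
    print(f"Escape velocity from the sun: {v_sun / 1000:.0f} km/s")
    print(f"Critical radius for one solar mass: {r_crit / 1000:.2f} km")
```

With these constants the sun's escape velocity comes out at a few hundred kilometers per second, far below the speed of light, and the sun would have to be squeezed to a radius of roughly three kilometers before light could not get away. The Newtonian picture is only a heuristic here; the consistent treatment needs general relativity.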

In fact, it is not really consistent to treat light like cannonballs in Newton’s theory of gravity because the speed of light is fixed. (A cannonball fired upward from the earth will be slowed down by gravity and will eventually stop and fall back; a photon, however, must continue upward at a constant speed. How then can Newtonian gravity affect light?) A consistent theory of how gravity affects light did not come along until Einstein proposed general relativity in 1915. And even then it was a long time before the implications of the theory for massive stars were understood.

To understand how a black hole might be formed, we first need an understanding of the life cycle of a star. A star is formed when a large amount of gas (mostly hydrogen) starts to collapse in on itself due to its gravitational attraction. As it contracts, the atoms of the gas collide with each other more and more frequently and at greater and greater speeds: the gas heats up. Eventually, the gas will be so hot that when the hydrogen atoms collide they no longer bounce off each other, but instead coalesce to form helium. The heat released in this reaction, which is like a controlled hydrogen bomb explosion, is what makes the star shine. This additional heat also increases the pressure of the gas until it is sufficient to balance the gravitational attraction, and the gas stops contracting. It is a bit like a balloon: there is a balance between the pressure of the air inside, which is trying to make the balloon expand, and the tension in the rubber, which is trying to make the balloon smaller. Stars will remain stable like this for a long time, with heat from the nuclear reactions balancing the gravitational attraction. Eventually, however, the star will run out of its hydrogen and other nuclear fuels. Paradoxically, the more fuel a star starts off with, the sooner it runs out. This is because the more massive the star is, the hotter it needs to be to balance its gravitational attraction. And the hotter it is, the faster it will use up its fuel. Our sun has probably got enough fuel for another five thousand million years or so, but more massive stars can use up their fuel in as little as one hundred million years, much less than the age of the universe. When a star runs out of fuel, it starts to cool off and so to contract. What might happen to it then was first understood only at the end of the 1920s.
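The paradox that more fuel means a shorter life can be made concrete with a rough scaling. The sketch below rests on two assumptions that are not in the text: that a star's luminosity grows roughly as its mass to the power 3.5 (an approximate empirical rule for ordinary stars), and that the sun's total hydrogen-burning lifetime is about ten thousand million years. The function name is illustrative.

```python
# Rough stellar lifetime scaling: fuel supply divided by burn rate.
# Assumptions (not from the text): luminosity ~ mass**3.5 and a total
# solar lifetime of 1e10 years. Both are order-of-magnitude inputs.

SUN_LIFETIME_YEARS = 1.0e10   # assumed total hydrogen-burning lifetime of the sun
LUMINOSITY_EXPONENT = 3.5     # assumed mass-luminosity exponent


def main_sequence_lifetime(mass_in_suns):
    """Rough lifetime in years for a star of the given mass (in solar masses).

    Fuel scales like the mass and the burn rate like mass**3.5,
    so the lifetime scales like mass**(1 - 3.5) = mass**-2.5.
    """
    if mass_in_suns <= 0:
        raise ValueError("mass must be positive")
    return SUN_LIFETIME_YEARS * mass_in_suns ** (1.0 - LUMINOSITY_EXPONENT)


if __name__ == "__main__":
    for mass in (0.5, 1.0, 2.0, 6.0, 20.0):
        years = main_sequence_lifetime(mass)
        print(f"{mass:5.1f} solar masses -> {years:.2e} years")
```

Under these assumptions a star of about six solar masses burns out in something like one hundred million years, which matches the order of magnitude quoted above. The exponent varies with stellar mass in practice, so the numbers should be read as a trend, not a prediction.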

In 1928 an Indian graduate student, Subrahmanyan Chandrasekhar, set sail for England to study at Cambridge with the British astronomer Sir Arthur Eddington, an expert on general relativity. (According to some accounts, a journalist told Eddington in the early 1920s that he had heard there were only three people in the world who understood general relativity. Eddington paused, then replied, “I am trying to think who the third person is.”) During his voyage from India, Chandrasekhar worked out how big a star could be and still support itself against its own gravity after it had used up all its fuel. The idea was this: when the star becomes small, the matter particles get very near each other, and so according to the Pauli exclusion principle, they must have very different velocities. This makes them move away from each other and so tends to make the star expand. A star can therefore maintain itself at a constant radius by a balance between the attraction of gravity and the repulsion that arises from the exclusion principle, just as earlier in its life gravity was balanced by the heat.

Chandrasekhar realized, however, that there is a limit to the repulsion that the exclusion principle can provide. The theory of relativity limits the maximum difference in the velocities of the matter particles in the star to the speed of light. This means that when the star got sufficiently dense, the repulsion caused by the exclusion principle would be less than the attraction of gravity. Chandrasekhar calculated that a cold star of more than about one and a half times the mass of the sun would not be able to support itself against its own gravity. (This mass is now known as the Chandrasekhar limit.) A similar discovery was made about the same time by the Russian scientist Lev Davidovich Landau.
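The size of this limiting mass can be estimated from fundamental constants alone. The sketch below is a dimensional estimate, not Chandrasekhar's calculation: it assumes the standard combination of Planck's constant, the speed of light, the gravitational constant, and the proton mass, and it leaves out the numerical factors and the dependence on composition that the full calculation supplies. The constants are rounded and the function name is illustrative.

```python
# Order-of-magnitude estimate of the limiting mass of a cold star.
# Assumption: the dimensional combination (hbar * c / G)**1.5 / m_proton**2,
# which sets the scale but omits numerical factors and composition.

HBAR = 1.0546e-34      # reduced Planck constant, J s
C = 2.998e8            # speed of light, m/s
G = 6.674e-11          # gravitational constant, m^3 kg^-1 s^-2
M_PROTON = 1.6726e-27  # proton mass, kg
M_SUN = 1.989e30       # mass of the sun, kg


def limiting_mass_scale():
    """Characteristic mass (kg) at which relativity caps the exclusion-principle support."""
    return (HBAR * C / G) ** 1.5 / M_PROTON ** 2


if __name__ == "__main__":
    mass_kg = limiting_mass_scale()
    print(f"Mass scale: {mass_kg:.2e} kg = {mass_kg / M_SUN:.2f} solar masses")
```

The result is a little under two solar masses, the same order as the limit of about one and a half solar masses described above. That a star's fate is set by a mass built from quantum, relativistic, and gravitational constants is the point of the estimate; the precise value depends on details this sketch ignores.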

This had serious implications for the ultimate fate of massive stars. If a star’s mass is less than the Chandrasekhar limit, it can eventually stop contracting and settle down to a possible final state as a “white dwarf” with a radius of a few thousand miles and a density of hundreds of tons per cubic inch. A white dwarf is supported by the exclusion principle repulsion between the electrons in its matter. We observe a large number of these white dwarf stars. One of the first to be discovered is a star that is orbiting around Sirius, the brightest star in the night sky. Landau pointed out that there was another possible final state for a star, also with a limiting mass of about one or two times the mass of the sun but much smaller even than a white dwarf. These stars would be supported by the exclusion principle repulsion between neutrons and protons, rather than between electrons. They were therefore called neutron stars. They would have a radius of only ten miles or so and a density of hundreds of millions of tons per cubic inch. At the time they were first predicted, there was no way that neutron stars could be observed. They were not actually detected until much later.

Stars with masses above the Chandrasekhar limit, on the other hand, have a big problem when they come to the end of their fuel. In some cases they may explode or manage to throw off enough matter to reduce their mass below the limit and so avoid catastrophic gravitational collapse, but it was difficult to believe that this always happened, no matter how big the star. How would it know that it had to lose weight? And even if every star managed to lose enough mass to avoid collapse, what would happen if you added more mass to a white dwarf or neutron star to take it over the limit? Would it collapse to infinite density? Eddington was shocked by that implication, and he refused to believe Chandrasekhar’s result. Eddington thought it was simply not possible that a star could collapse to a point. This was the view of most scientists: Einstein himself wrote a paper in which he claimed that stars would not shrink to zero size. The hostility of other scientists, particularly Eddington, his former teacher and the leading authority on the structure of stars, persuaded Chandrasekhar to abandon this line of work and turn instead to other problems in astronomy, such as the motion of star clusters. However, when he was awarded the Nobel Prize in 1983, it was, at least in part, for his early work on the limiting mass of cold stars.

Chandrasekhar had shown that the exclusion principle could not halt the collapse of a star more massive than the Chandrasekhar limit, but the problem of understanding what would happen to such a star, according to general relativity, was first solved by a young American, Robert Oppenheimer, in 1939. His result, however, suggested that there would be no observational consequences that could be detected by the telescopes of the day. Then World War II intervened and Oppenheimer himself became closely involved in the atom bomb project. After the war the problem of gravitational collapse was largely forgotten as most scientists became caught up in what happens on the scale of the atom and its nucleus. In the 1960s, however, interest in the large-scale problems of astronomy and cosmology was revived by a great increase in the number and range of astronomical observations brought about by the application of modern technology. Oppenheimer’s work was then rediscovered and extended by a number of people.

The picture that we now have from Oppenheimer’s work is as follows. The gravitational field of the star changes the paths of light rays in space-time from what they would have been had the star not been present. The light cones, which indicate the paths followed in space and time by flashes of light emitted from their tips, are bent slightly inward near the surface of the star. This can be seen in the bending of light from distant stars observed during an eclipse of the sun. As the star contracts, the gravitational field at its surface gets stronger and the light cones get bent inward more. This makes it more difficult for light from the star to escape, and the light appears dimmer and redder to an observer at a distance. Eventually, when the star has shrunk to a certain critical radius, the gravitational field at the surface becomes so strong that the light cones are bent inward so much that light can no longer escape. According to the theory of relativity, nothing can travel faster than light. Thus if light cannot escape, neither can anything else; everything is dragged back by the gravitational field. So one has a set of events, a region of space-time, from which it is not possible to escape to reach a distant observer. This region is what we now call a black hole. Its boundary is called the event horizon and it coincides with the paths of light rays that just fail to escape from the black hole.
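The reddening of the light as the star shrinks can be illustrated numerically. The sketch below assumes the simplest case, which is not spelled out in the text: a non-rotating, spherical star described by the Schwarzschild solution, with light emitted from its surface and received far away. The constants are rounded and the function names are illustrative.

```python
# Gravitational redshift of light leaving the surface of a contracting star.
# Assumptions: non-rotating spherical star (Schwarzschild geometry), emitter at
# rest on the surface, observer far away. Function names are hypothetical.
import math

G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8        # speed of light, m/s
M_SUN = 1.989e30   # mass of the sun, kg
R_SUN = 6.957e8    # radius of the sun, m


def schwarzschild_radius(mass_kg):
    """Critical radius at which light can no longer escape."""
    return 2.0 * G * mass_kg / C**2


def redshift_factor(surface_radius_m, mass_kg):
    """Ratio of observed to emitted wavelength for light from the surface."""
    r_s = schwarzschild_radius(mass_kg)
    if surface_radius_m <= r_s:
        raise ValueError("surface is at or inside the critical radius")
    return 1.0 / math.sqrt(1.0 - r_s / surface_radius_m)


if __name__ == "__main__":
    r_s = schwarzschild_radius(M_SUN)
    print(f"Sun today: wavelength stretched by {redshift_factor(R_SUN, M_SUN) - 1:.2e}")
    for multiple in (10.0, 2.0, 1.1, 1.01, 1.0001):
        factor = redshift_factor(multiple * r_s, M_SUN)
        print(f"surface at {multiple:>7} critical radii -> factor {factor:.2f}")
```

For the sun at its present size the stretch is only about two parts in a million. As the surface approaches the critical radius the factor grows without bound, which is the quantitative form of the statement that the light appears ever dimmer and redder until none of it escapes.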

*

The work that Roger Penrose and I did between 1965 and 1970 showed that, according to general relativity, there must be a singularity of infinite density and space-time curvature within a black hole. This is rather like the big bang at the beginning of time, only it would be an end of time for the collapsing body and the astronaut. At this singularity the laws of science and our ability to predict the future would break down. However, any observer who remained outside the black hole would not be affected by this failure of predictability, because neither light nor any other signal could reach him from the singularity. This remarkable fact led Roger Penrose to propose the cosmic censorship hypothesis, which might be paraphrased as “God abhors a naked singularity.” In other words, the singularities produced by gravitational collapse occur only in places, like black holes, where they are decently hidden from outside view by an event horizon. Strictly, this is what is known as the weak cosmic censorship hypothesis: it protects observers who remain outside the black hole from the consequences of the breakdown of predictability that occurs at the singularity, but it does nothing at all for the poor unfortunate astronaut who falls into the hole.

There are some solutions of the equations of general relativity in which it is possible for our astronaut to see a naked singularity: he may be able to avoid hitting the singularity and instead fall through a “wormhole” and come out in another region of the universe. This would offer great possibilities for travel in space and time, but unfortunately it seems that these solutions may all be highly unstable; the least disturbance, such as the presence of an astronaut, may change them so that the astronaut could not see the singularity until he hit it and his time came to an end. In other words, the singularity would always lie in his future and never in his past. The strong version of the cosmic censorship hypothesis states that in a realistic solution, the singularities would always lie either entirely in the future (like the singularities of gravitational collapse) or entirely in the past (like the big bang). I strongly believe in cosmic censorship so I bet Kip Thorne and John Preskill of Cal Tech that it would always hold. I lost the bet on a technicality because examples were produced of solutions with a singularity that was visible from a long way away. So I had to pay up, which according to the terms of the bet meant I had to clothe their nakedness. But I can claim a moral victory. The naked singularities were unstable: the least disturbance would cause them either to disappear or to be hidden behind an event horizon. So they would not occur in realistic situations.

The event horizon, the boundary of the region of space-time from which it is not possible to escape, acts rather like a one-way membrane around the black hole: objects, such as unwary astronauts, can fall through the event horizon into the black hole, but nothing can ever get out of the black hole through the event horizon. (Remember that the event horizon is the path in space-time of light that is trying to escape from the black hole, and nothing can travel faster than light.) One could well say of the event horizon what the poet Dante said of the entrance to Hell: “All hope abandon, ye who enter here.” Anything or anyone who falls through the event horizon will soon reach the region of infinite density and the end of time.

__________________________________

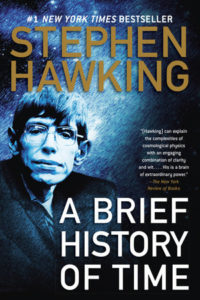

From A Brief History of Time: And Other Essays by Stephen Hawking. Copyright © 1988, 1996, 2017 by Stephen Hawking. Published by Ballantine Books, an imprint of Random House, a division of Penguin Random House LLC.

Stephen Hawking

Stephen Hawking was a theoretical physicist, cosmologist, professor, and Director of Research at the Centre for Theoretical Cosmology at the University of Cambridge. His publications include A Brief History of Time, Black Holes and Baby Universes and Other Essays, The Universe in a Nutshell, The Grand Design and My Brief History.