The Machines Are Coming, and They Write Really Bad Poetry

(But Don't Tell Them We Said So)

If and when the machines take over, it won’t be as we dreamt it. It won’t be a cold, homicidal smart speaker, or an albino android, or living tissue over a metal endoskeleton, shaped like an Austrian bodybuilder. We could’ve guessed they’d eventually beat us at stuff like chess. And Go. And competitive video games. But those are cold and calculating tasks, fit for machines. We told ourselves that they’d only ever be, well, computers: rigid, rational, unfeeling. The truly human features would always be ours. The warm gooey heart, which no algorithm could ever copy.

But in reality, the robots will be much more lifelike—and because of that, even more unsettling. They won’t sound robotic, because they’ll sound just like us. They might look just like us, too. They might have psychoses, and trippy, surrealist dreams. And someday soon, they might even write some decent verse.

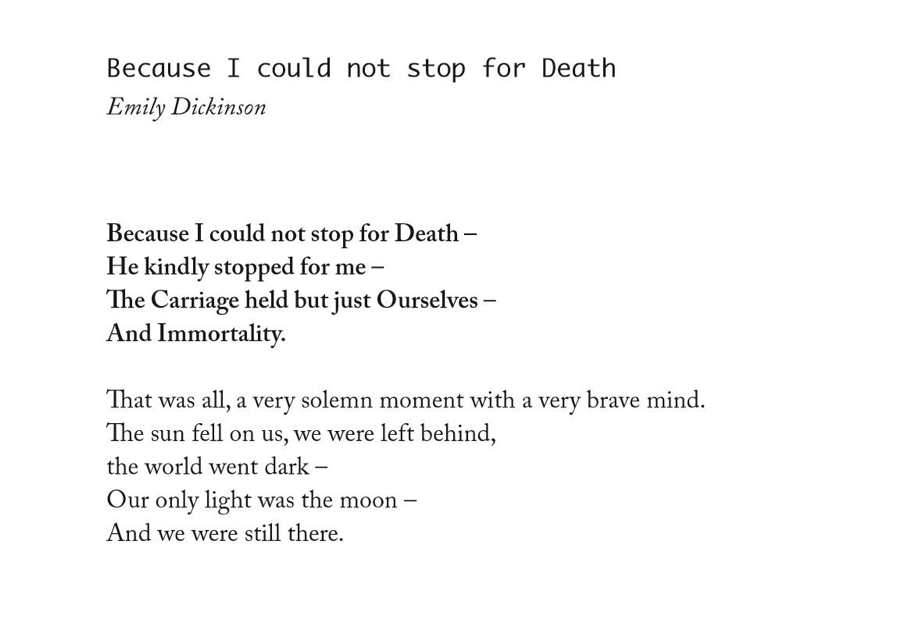

This is an AI-generated attempt to write in the style of Emily Dickinson. It was produced by an artificial intelligence language program called GPT-2, a project of the San Francisco-based research firm OpenAI. The bolded portions represent the prompt given to the program, while the rest is the program’s own; you can try it out for yourself online. OpenAI only recently released the full code for GPT-2, after initially fearing that it would help amplify spam and fake news; if its fake poetry is any indication, debate over the power of AI language models might just be getting started.

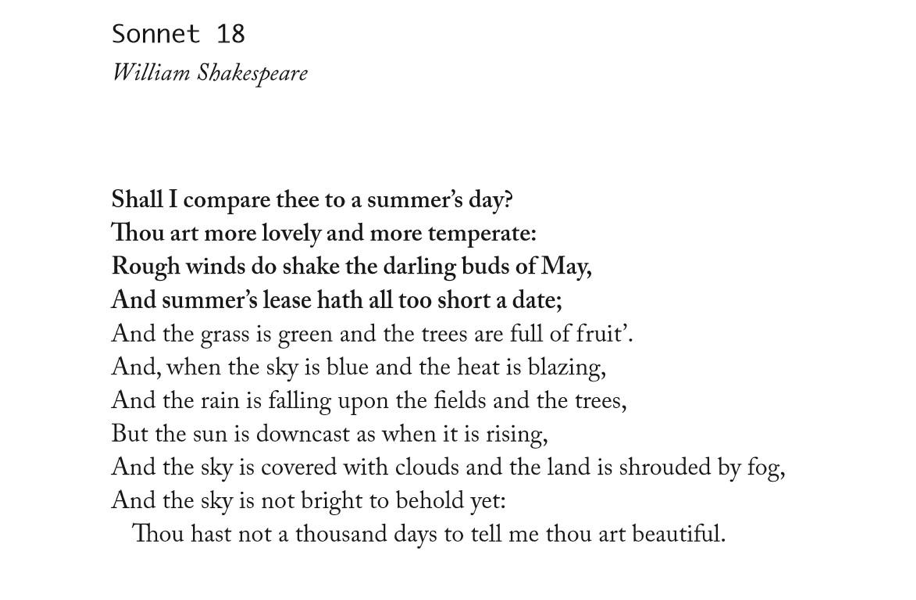

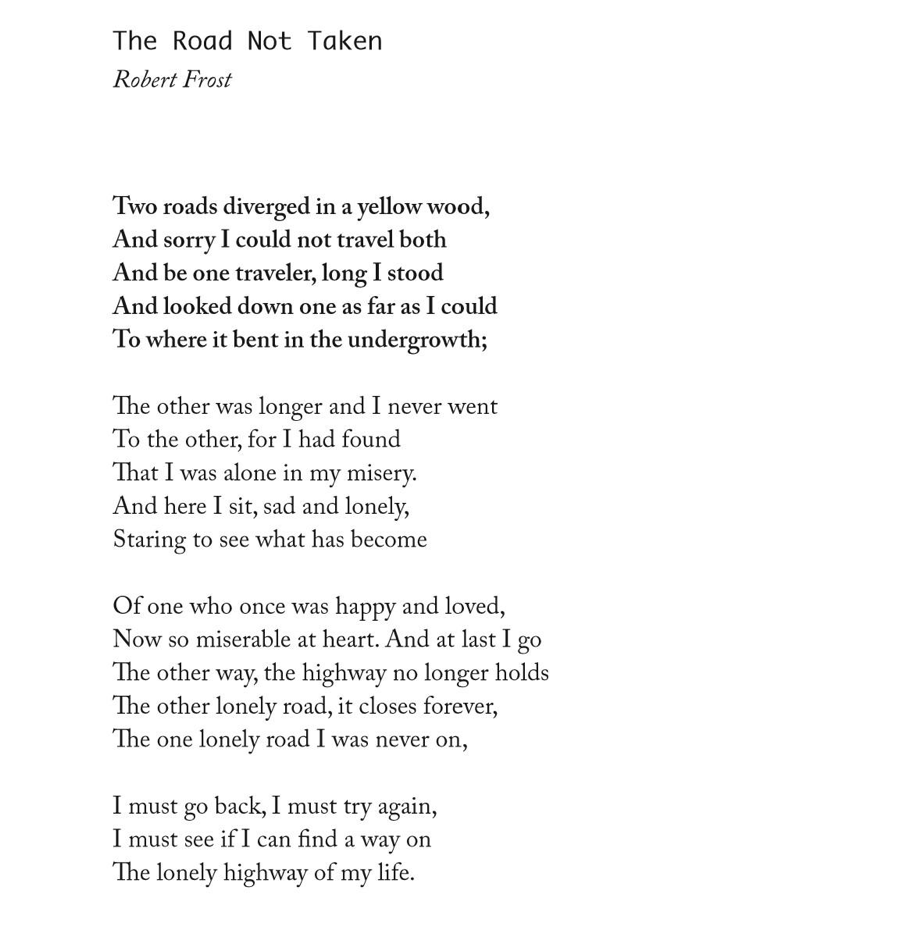

Using GPT-2, a group of Silicon Valley workers have, for our and their own amusement, compiled a collection of attempts by the AI to complete famous works of poetry. The resulting chapbook, Transformer Poetry, published by Paper Gains Publishing in December, is a tongue-in-cheek collection of surprisingly good and comically nonsensical computer-generated verse. No one will confuse it with human poetry just yet—or at least, you’d hope not. But in other ways, it’s also strikingly lifelike: an uncanny look at just how good inorganic authors might become, and the consequences that might come with it.

Computer programs have become much more like us of late, in large part because they are increasingly modeled after our own minds. The booming field of machine learning, whose products are familiar to anyone who has used their smartphone’s voice assistant or image recognition, has been driven by the concept of the neural network, in which individual nodes, similar to neurons, “learn” to build a complex web of associations through trial and error. Where traditional programs are given rules that determine their outputs, neural nets are instead given the desired outcomes, from which they learn, through millions or even billions of repeated trials, their own ways to achieve them.
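For the curious, the contrast between written rules and learned ones can be sketched in a few lines of Python. This is only a toy: a single artificial "neuron" (a perceptron) learning the logical AND of two inputs. The task, the function names, and the training settings are all illustrative assumptions, and none of it resembles the scale or design of a system like GPT-2.

```python
# Hypothetical toy task: output 1 only when both inputs are 1 (logical AND).
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

def rule_based(a, b):
    # Traditional program: the programmer writes the rule directly.
    return 1 if a == 1 and b == 1 else 0

def predict(weights, a, b):
    # A single neuron: weighted sum of the inputs, then a yes/no threshold.
    w_a, w_b, bias = weights
    return 1 if w_a * a + w_b * b + bias > 0 else 0

def train_perceptron(examples, epochs=20, lr=1.0):
    # Learned program: start with no rule at all, then nudge the weights
    # after every wrong guess (trial and error on the desired outcomes).
    w_a, w_b, bias = 0.0, 0.0, 0.0
    for _ in range(epochs):
        for (a, b), target in examples:
            error = target - predict((w_a, w_b, bias), a, b)
            w_a += lr * error * a
            w_b += lr * error * b
            bias += lr * error
    return w_a, w_b, bias

weights = train_perceptron(AND_EXAMPLES)
for (a, b), _ in AND_EXAMPLES:
    print((a, b), "rule:", rule_based(a, b), "learned:", predict(weights, a, b))
```

Nobody tells the second version what AND means. It is shown only the right answers, and it adjusts itself until its guesses match them.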

For its training, GPT-2 was given a corpus of 8 million webpages, chosen with a quintessentially internet-y method of natural selection: “In order to preserve document quality,” OpenAI’s post states, “we used only pages which have been curated/filtered by humans—specifically, we used outbound links from Reddit which received at least 3 karma.” Through trial and error, GPT-2 learned how to predict the rest of a piece of text, given only the first few words or sentences. In turn, this gave it a general method for completing other texts, regardless of content or genre.
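The idea of learning to continue a text can be illustrated with a deliberately crude stand-in. The sketch below is not how GPT-2 works internally (GPT-2 is a large neural network, and this is a simple word-pair counter), and the tiny "corpus" and function names are made up for illustration. It shows only the general shape of the task: read some text, tally what tends to come next, then extend a prompt one word at a time.

```python
from collections import Counter, defaultdict

def train(text):
    # For every word, count which words follow it in the training text.
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def complete(following, prompt, length=5):
    # Extend the prompt one word at a time, always choosing the word
    # that most often followed the previous one during training.
    words = prompt.lower().split()
    for _ in range(length):
        candidates = following.get(words[-1])
        if not candidates:
            break  # the model never saw anything follow this word
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

# Hypothetical miniature corpus, standing in for 8 million webpages.
corpus = "the road not taken the road less traveled the road not taken"
model = train(corpus)
print(complete(model, "The road", length=2))  # prints: the road not taken
```

Because the counter only ever looks one word back, it has no sense of topic or form. The gap between that and GPT-2's output is a rough measure of how much more the neural network has absorbed.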

At first glance, GPT-2’s capacity for imitation is impressive: the diction, grammar, and syntax are all leaps beyond what most of us would expect from a computer. But if you squint harder, the cracks immediately show. Its rendition of the most famous of Shakespeare’s sonnets immediately throws rhyme and meter out the window—but hey, most of us barely remember those rules, either. Lost, too, is the metaphor between the narrator’s beloved and a summer’s day, as the machine opts instead for a litany of images having to do with hot weather, followed by a sudden pivot into cloudy skies. And in lieu of Shakespeare’s concluding immortalization of beauty, we get an inversion so perfect it’s perverse: Tell me you’re beautiful, and make it quick!

Other attempts make sense, strictly speaking, but veer into unexpected results. Take “The Road Not Taken,” for example. While Frost’s original is really about the self-delusion that one’s choice “has made all the difference,” and most people remember it as a paean to rugged iconoclasm and taking the road “less traveled” (befitting its status as “the most misread poem in America”), GPT-2 somehow finds a third path, creating a narrator who is so racked with despair at having taken the wrong path that they desperately retrace their steps, only to find that the other road is shut.

GPT-2 has a tendency to take a piece of syntax and run with it, as it does here, spinning off the “I will” mode from the last verse it was given of Angelou’s poem and mutating it ad nauseam. It feels almost like amateur improv, the AI stalling as it tries to figure out what else to do. “Repetition is an easy thing to model,” says David Luan, VP of engineering at OpenAI. “Human writing tends to have just enough of it that the model learns that repeating things increases the likelihood of success.”

Luan also says that this is the result of a statistical method called top-k sampling, in which all but the k most likely next words are eliminated from the pool of candidates, in order to prevent the text from veering off topic. But these methods of avoiding egregious errors also, it seems, have the effect of amplifying certain tendencies to an absurd extreme.
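In rough terms, top-k sampling keeps only the k most probable candidates for the next word and then picks among those at random, weighted by their probabilities. A minimal sketch follows, assuming the model has already produced a probability for each candidate; the words, the numbers, and the function name are invented for illustration and are not taken from GPT-2.

```python
import random

def top_k_sample(probs, k, rng=random):
    # Keep only the k most likely next words and discard the rest.
    top = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]
    words = [word for word, _ in top]
    weights = [p for _, p in top]
    # random.choices treats the weights as relative, so the surviving words
    # are drawn in proportion to their original probabilities.
    return rng.choices(words, weights=weights, k=1)[0]

# Hypothetical probabilities for the word after "Shall I compare thee to a summer's ..."
next_word_probs = {"day": 0.50, "rose": 0.30, "sea": 0.15, "toaster": 0.05}

print(top_k_sample(next_word_probs, k=2))  # always "day" or "rose", never "toaster"
```

With a small k, the unlikely "toaster" can never appear, which keeps the text on topic. The same cutoff also means the safest, most familiar continuations get chosen again and again, which is one plausible route to the runaway repetition described above.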

Despite that, GPT-2’s poetic mind sometimes diverges much more inexplicably, creating a first-person narrator that doesn’t exist in the original, and generating thoughts that bear little relation to their source but are, nevertheless, oddly profound: “The last words of a man-made civilization / Are the words: ‘We are free.’” And in case you’re curious, none of those phrases show up in any online searches; whatever the program’s poetic sensibilities, they are not wholly unoriginal.

There’s a common thread here. GPT-2’s writing is grammatically correct. It all more or less sounds true to its source, if all you heard was the tone. But what those sequences mean, therein lies the rub. GPT-2’s poetry prizes style over substance. Which is understandable, because it doesn’t know what substance is.

Being a pure language model, GPT-2 has no knowledge of what words actually refer to, only the probability of a word appearing next to others. To it, a word like “chair” is just a string of characters, not a cluster of images or objects, let alone some more nebulous conceptual grouping of things that humans sit on. According to its makers, GPT-2’s most common lapses stem from this basic ignorance: “[W]e have observed various failure modes, such as repetitive text, world modeling failures (e.g. the model sometimes writes about fires happening under water), and unnatural topic switching.” Lacking any knowledge of referents, GPT-2’s process instead works something like a semiotics without meaning: a game of only signifiers, without anything being signified.

In some ways, this feels similar to how humans develop and use language. Children frequently copy words and use them in grammatical sequences before they know what they’re saying. The mind of a writer, too, works in a haphazard and associative way, absorbing and regurgitating idioms and syntaxes, bits of aesthetically pleasing strings. But in the hands of a mature, human user, all these patterns are ultimately anchored to meaning—the goal of conveying a feeling or thought, not merely sounding like you are. A pretty turn of phrase means nothing, unless it means exactly something.

This is the real missing link between machines and literature: a knowledge of reality, the very thing that language was made to describe. Some even argue that a physical being is necessary to produce true intelligence, and that no disembodied experience of the world will ever make a machine as sentient as we are. Clearly, the age of AI might come with significant risks, but it’s nice to know that for the time being, at least, our poetic soul is safe.