How, Exactly, Did We Come Up with What Counts As 'Normal'?

A Brief History of the Pseudoscience Behind the Myth of the "Average"

Where did normal come from, and why does it have the power it does in our lives, in our institutions, in our world? How did it become like air—invisible, essential, all around us? As Ian Hacking was the first to point out, look up normal in any English dictionary and the first definition is “usual, regular, common, typical.” How did this become something to aspire to? How did everyone being the same achieve the cultural force it has?

There is an entire field of people who study this kind of stuff, and have written books about it. Madness and Civilization by Michel Foucault is a page-turner. The Normal and the Pathological by Georges Canguilhem is laugh-out-loud funny. Normality: A Critical Genealogy by Peter Cryle and Elizabeth Stephens should be on your summer vacation reading list. Enforcing Normalcy by Lennard J. Davis is a total life changer. These books, and others, have knocked normal off the pedestal and into the dirt. Because normal is contingent—on history, on power, and, most of all, on flawed humans faking it until they make it.

As these scholars have noted, the word normal entered the English language in the mid-1840s, followed by normality in 1849, and normalcy in 1857. This is shocking for a word that masquerades as an ever-present universal truth. When normal was first used it had nothing to do with people, or society, or human behavior. Norm and normal were Latin words used by mathematicians. Normal comes from the Latin word norma, which refers to a carpenter’s square, or T-square. Building off the Latin, normal first meant “perpendicular” or “at right angles.”

Normal, however, even as a distinct word in geometry, is more complicated than it seems. On the one hand, normal is describing a fact in the world—a line may be orthogonal, or normal, or it may not. Normal is an objective description of that line. But a right angle, in geometry, is also good, is desirable, is a universal mathematical truth that many mathematicians, then and now, describe as a type of beauty and perfection. Here we see two facets of normal that are familiar to us now and make it so powerful. Normal is both a fact in the world and a judgment of what is right. As Hacking wrote, “One can, then, use the word ‘normal’ to say how things are, but also to say how they ought to be.”

A bunch of other words out there were looking to rival normal: natural, common, ordinary, typical, straight, perfect, and ideal. The list goes on. But here’s the thing—in the survival of the fittest, normal had a key advantage because it could mean more than one thing. Its ambiguity was its strength.

It’s scary to think, but it’s true: We have normal today not because of some deliberate process, or even an organized conspiracy, but because it worked better than other words. People started using normal in many different contexts and in many different ways because it was there, because it helped them do something, because other people were using it, because it rolled off the tongue, because it gave them power.

So who used normal, and why, and how? Normal was first used outside a mathematical context in the mid-1800s by a group of men (gender pronoun alert—everyone in this history of normalcy is a man) in the academic disciplines of comparative anatomy and physiology. These two fields, by the 19th century, had professional dominion over the human body.

This crew eventually used the term “normal state” to describe functioning organs and other systems inside the body. And why did they choose “normal state”? Who the hell knows? Maybe they found the conflation of the factual with the value-driven useful. Maybe there was a professional advantage in appropriating a term associated with mathematical rigor. (At the time, doctors weren’t hot shit the way they are now. A doctor’s cure for the common cold was leeches; headaches were alleviated by bleeding people, a treatment that killed many, which I guess is a kind of cure; and masturbation was “treated” with castration.) Or maybe they just liked the way it sounded. The historical record is unclear. But use it they did—with great abundance and little rigor—sort of like I do with all words in my ambitious pursuit of creative spelling.

For these guys, “normal state” was used to describe bodies and organs that were “perfect” or “ideal” and also to name certain states as “natural”; and of course, to judge an organ as healthy. I don’t blame them for using normal instead of perfect, ideal, natural, and all the other words they could have used. This wasn’t a grand conspiracy. So many words. So little time. I think they just got lazy, said screw it, normal will do. One word is better than five.

*

The anatomists and physiologists, however, never did find or define the normal state. Instead they studied and defined its opposite—the pathological state. They defined normal as what is not abnormal. But we absolutely have a proactive definition of normal today, don’t we?

Normal isn’t just not abnormal; it is an upper-middle-class, suburban, straight, able-bodied, and mentally fit married white dude with 2.5 kids. Where did this statistic come from? Well, the 0.5 kid thing gives us an idea of where to start looking. You’ve never met 0.5 of a child because there is no such thing. The 0.5 children is an abstraction: take all the kids in the country, add them up, divide that total by the number of families, and you have an average number of children per household. What is average, however, is often called normal—and what is called normal becomes the norm.

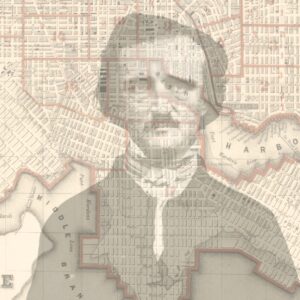

The idea of the average as normal goes way back to 1713 and a Swiss mathematician named Jakob Bernoulli, whom many consider one of the founders of probability theory and modern statistics. He was obsessed with Renaissance games of chance (i.e., gambling) and later became obsessed with developing a mathematical method that would “tame chance” and calculate the odds of random events (i.e., winning or losing at dice). To figure this out, Bernoulli developed what became known as the calculus of probabilities, which became the foundation of all statistics. This was a big deal. The calculus of probabilities specifically, and statistics generally, made many seemingly random events more predictable. With this new way of thinking, Bernoulli challenged and disrupted a deterministic view of the world. He even undermined the Church’s whole thing about divine creation and intervention, and perhaps, most important to him, gave people a way to win at craps.

Fast-forward a hundred years and the calculus of probabilities gets taken up by Adolphe Quetelet and applied not to gambling but to human beings. Quetelet was the most important European statistical thinker of his time. He was, as were most of the normalists who came before and after him, ironically, an odd human. He was known to wax poetic about statistical laws and their beauty, and often described finding a mean in a data set in ecstatic terms.

Quetelet was a true believer that statistics should be applied to all aspects of society. He wasn’t content to predict which numbered ball would roll out of a slot or how many times heads or tails would come up in a coin toss. In 1835, he put forth the concept of the “average man.” His plan was to gather massive amounts of statistical data about any given population and calculate the mean (which, in the bell-shaped distributions he assumed, is also the most commonly occurring value) of various sets of features—height, weight, eye color—and later, qualities such as intelligence and morality, and use this “average man” as a model for society.

Quetelet was fuzzy on whether he believed that the average man was a real person. On the one hand, he did make many statements about the average man as a statistical abstraction. On the other, later in his career, he got more into the idea that there were “types” of humans as a result of a study on the features of Scottish soldiers. (Racism alert: unsurprisingly, he found in this study that black people weren’t “normal.”) He did make claims that the ideal type could be found in an actual person (though he was thinking of someone like Ewan McGregor). Regardless, Quetelet really believed that “the average man” was perfect and beautiful. The average man was no Homer Simpson—average, as in typical—but rather a model human being who should guide society.

If the average man were perfectly established, one could consider him as the type of beauty. . . . everything that was furthest from resembling his proportions or his manner of being would constitute deformities or illnesses; anything that was so different not only in its proportions but in its form, as to stand outside the limits observed, would constitute monstrosity.

I find it deeply ironic that even here, in the so-called objectivity of numbers and facts, there lingers the wish for something better than real life. Something greater than ourselves. There is always a dream of self-transcendence, and in that dream, a reality of self-negation. Somewhere, in all of us, we wish to be other than we are.

Quetelet’s idea of the average man became the normal man. He used regular, average, and normal interchangeably. In 1870, in a series of essays on “deformities” in children, he measured children with disabilities against the normal proportions of other human bodies, which he calculated using averages. The normal and the average had merged, as explained in Normality: A Critical Genealogy: “The task of statistics was principally to establish just what those normal proportions were, and the job of a therapeutic medical science was to do all that it could to reduce the gap between the actual and the normal/ideal.”

But not everyone was feeling what Quetelet was throwing down. He was booed off the stage at many medical symposiums and shunned by the emerging public safety apparatus in France. Often Quetelet’s averages weren’t representative averages at all. For example, when he calculated the average age of a population, he took out all the kids. When studying what was “natural” for women, he used data from men. It’s as if he found that cats are the average pet by averaging only people who had cats. The most damning critique was pretty simple: the average man doesn’t exist, by his own admission. It is a statistical fiction, so how is the concept of the average man helpful to being a doctor, running a government or school, or living a good life? How is it helpful for anything, really? Because, even according to Quetelet, the average man is the impossible man.

For all Quetelet’s talk of the importance of means and averages, and his conflation of the two with normal, he never argued that the average man was a real person. He got close with his Scottish “type” thing. And who can blame him? Those accents are rad. But he backed off and argued that the average man was only a statistical construct that government officials and other professionals could use to understand the world. It wasn’t something to actually be.

While Quetelet laid the groundwork, no one is more responsible for the modern usage of normal than a man named Francis Galton. Galton was Charles Darwin’s cousin, began his career studying medicine, and then left medicine for the emerging field of statistics. As Lennard Davis described in his book Enforcing Normalcy, Galton made significant changes in statistical theory that created the concept of the norm as we know it.

While these changes are mathematically complex, here is the CliffsNotes summary: Galton was into the idea of improving the human race and believed that statistics could help. He loved Quetelet’s whole “average man” thing but had one minor problem. In the center of Quetelet’s bell curve were the most commonly occurring traits, not the ideal bodies and minds Galton believed everyone should have. To solve this problem, Galton, through a complicated and convoluted mathematical process (the technical definition of statistics), took the bell curve idea, where the most common traits clustered in the middle and the rarer ones sat at the extremes, and created what he called an “ogive” (he had a habit of making up words) which, as Davis explains, “is arranged in quartiles with an ascending curve that features the desired trait as ‘higher’ than the undesirable deviation.” He called this the “normal distribution curve,” and it made the most commonly occurring differences that Galton did not value into deficiencies, and the uncommon ideal bodies and minds that he did value… normal.

This was a big deal. According to Peter Cryle and Elizabeth Stephens, authors of Normality: A Critical Genealogy, “Galton was not only the first person to develop a properly statistical theory of the normal . . . . but also the first to suggest that it be applied as a practice of social and biological normalization.” By the early twentieth century, the concept of a normal man had taken hold. The emerging field of public health loved it. Schools, with rows of desks and a one-size-fits-all approach to learning, were designed for the mythical middle. The industrial economy needed standardization, which was brought about by the application of averages, standards, and norms to industrial production. Eugenics, a movement Galton himself named and founded, was committed to ridding the world of “defectives” (more on this later) and was founded on the concept of the normal distribution curve.

The conflation of the “average” man with the normal man was a significant step in the history of normality. Statistics did not discover the normal, but invented the normal as that which should occur most often. According to Cryle and Stephens, this was the exact time in history when “a brand of social knowledge grounded in mathematics was asserting that the average mattered more than the exceptions.” This was a big deal, especially to those who would later find themselves on the wrong side of normal. As Alain Desrosières, a renowned historian of statistics, wrote, with this power play by statistical thought the diversity inherent in living creatures was reduced to an inessential spread of “errors” and the average was held up as the normal—as a literal, moral, and intellectual ideal.

––––––––––––––––––––––––––––––––––––––––

From Normal Sucks: How to Love, Learn, and Thrive Outside the Lines. Used with the permission of the publisher, Henry Holt. Copyright © 2019 by Jonathan Mooney.

Jonathan Mooney

Jonathan Mooney’s work has been featured in The New York Times, The Los Angeles Times, The Chicago Tribune, USA Today, HBO, NPR, ABC News, New York Magazine, The Washington Post, and The Boston Globe, and he continues to speak across the nation about neurological and physical diversity, inspiring those who live with differences and advocating for change. He is author of The Short Bus, Learning Outside the Lines, and Normal Sucks.